About five years ago I wrote about the vector format, and why it was not well suited for application icons. Five years is a long time in our industry, and things have changed quite dramatically since then. That post was written when pretty much all application icons looked like this:

Some of these rich visuals are still around. In fact, the last three icons are taken from the latest releases of Gemini, Kaleidoscope and Transmit desktop Mac apps. However, the tide of flat / minimalistic design that swept the mobile platforms in the last few years has not spared the desktop. Eli Schiff is probably the most vocal critic of the shift away from the richness of skeuomorphic designs, showing quite a few examples of this trend here and here.

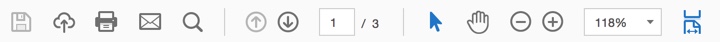

Indeed it’s a rare thing to see multi-colored textured icons inside apps these days. On my Mac laptop the only two holdouts are Soulver and MarsEdit. The rest have moved on to much simpler monochromatic icons that are predominantly using simple shapes and a few line strokes. Here is Adobe Acrobat’s toolbar:

and Evernote:

This is Slack:

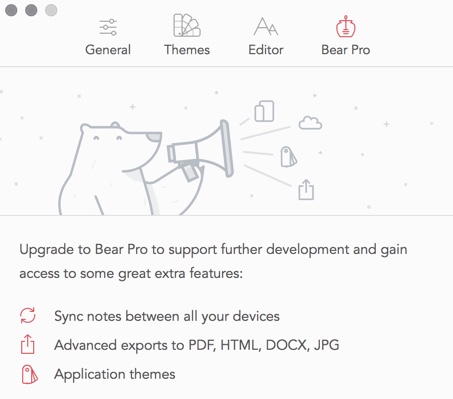

and Bear:

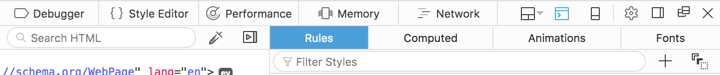

Line icons are dominating the UI of the web developer tools of Firefox:

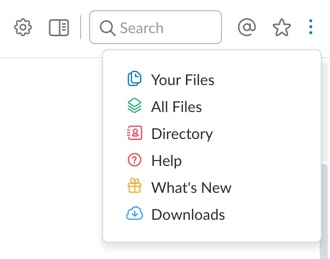

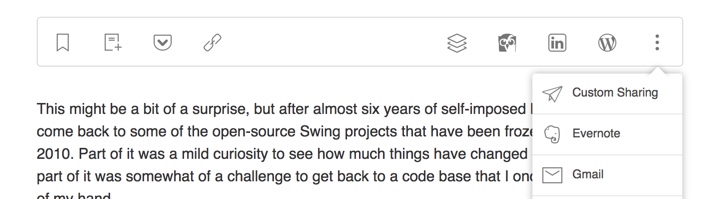

They have also found their way onto web sites. Here is Feedly:

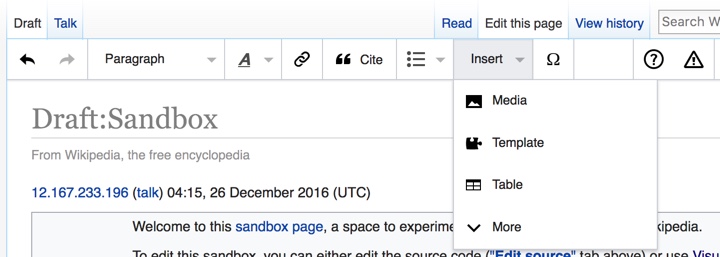

and Wikipedia’s visual editor:

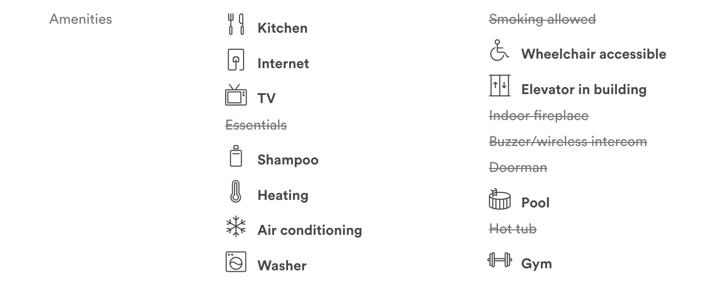

You can also see this style on Airbnb listing pages (with a somewhat misbalanced amenities list):

and, to a smaller extent, on other websites such as Apple’s:

Google’s Material design places heavy emphasis on simple, clean icons:

They can be easily tinted to conform to the brand guidelines of the specific app, and lend themselves quite easily to beautiful animations. And, needless to say, the vector format is a perfect match to encode the visuals of such icons.

Trends come and go, and I wouldn’t recommend making bold predictions on what things will look like in another five years. For now, vector format has defied the predictions I laid out in 2011 (even though I ended that post by giving myself a way out). And, at least for now, vector format is the cool new kid.

This might be a bit of a surprise, but after almost six years of self-imposed hiatus I’ve decided to come back to some of the open-source Swing projects that have been frozen in time since late 2010. Part of it was a mild curiosity to see how much things have changed in the meantime, and part of it was somewhat of a challenge to get back to a code base that I once knew like the back of my hand.

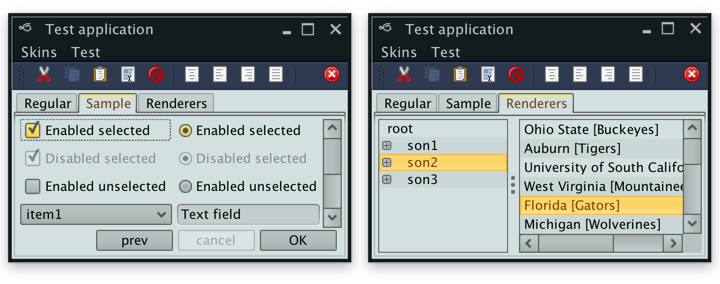

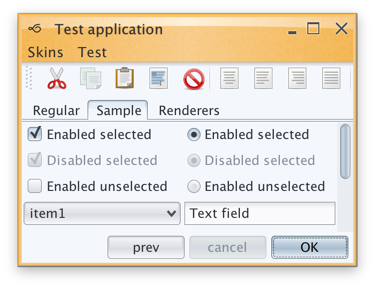

Before delving into the rest of this rather lengthy post, a fair warning. The images seen here are only meant to be viewed on high-resolution / retina / 2x-density screens. Otherwise what you’re seeing is a scaled down version of the original images that has little to do with the actual visuals seen on those screens.

Having said that, what you see above is what I saw a couple of months ago on my 2013 MacBook Pro that has a Retina screen. Having spent the last four years looking at crisp visuals of pretty much all the native apps (except for Eclipse that is just now starting to get there, but then again calling Eclipse a native app is somewhat of a stretch), this was painful. There are obvious line artifacts, with the two most noticeable being the light outline along left/top edges of the main window and a darker horizontal line across the selected tab. But other than that, everything is just too blocky, pixelated and way too fat.

Two months later, I’m happy to report that Substance is a fully fledged citizen of the Retina universe. Given the amount of work that went into making that happen, the next release will have a version bump to 7.0 code-named Uruguay. The three big themes of this release will be as follows.

Hi DPI support

Searching the web for the initial pointers on Hi DPI support in Swing got me to this post from 2013 by Konstantin Bulenkov. Konstantin is the team lead at JetBrains – the company behind the IntelliJ platform and all the apps built on top of that platform. Did you know that IntelliJ IDEA is a Swing app? If you didn’t, now you know.

Lucky for me, the code referenced in that post is part of the community edition of IDEA and is available under the quite permissive Apache license. And even though some part of that code is using reflection to query the underlying capabilities, if it’s working for IDEA with its hundreds of thousands of active users, it’s certainly good enough for me.
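To give a rough idea of what Hi DPI support involves, here is a minimal sketch. It is not the IDEA code and not what Substance ships. It assumes Java 9 or later, where the screen scale factor is exposed through the public `GraphicsConfiguration.getDefaultTransform()` API instead of reflection. The class name `HiDpiSketch` and both method names are made up for illustration.

```java
import java.awt.Color;
import java.awt.Graphics2D;
import java.awt.GraphicsEnvironment;
import java.awt.image.BufferedImage;

// Hypothetical helper, for illustration only.
final class HiDpiSketch {
    /** Horizontal scale of the default screen, or 1.0 when no screen is available. */
    static double detectScaleFactor() {
        if (GraphicsEnvironment.isHeadless()) {
            return 1.0;
        }
        return GraphicsEnvironment.getLocalGraphicsEnvironment()
                .getDefaultScreenDevice()
                .getDefaultConfiguration()
                .getDefaultTransform()
                .getScaleX();
    }

    /**
     * Renders into an offscreen image that has scale-times as many pixels
     * as the logical size, so that painting code keeps using logical units.
     */
    static BufferedImage renderScaled(int width, int height, double scale) {
        int pixelWidth = (int) Math.ceil(width * scale);
        int pixelHeight = (int) Math.ceil(height * scale);
        BufferedImage image =
                new BufferedImage(pixelWidth, pixelHeight, BufferedImage.TYPE_INT_ARGB);
        Graphics2D g = image.createGraphics();
        try {
            // After this call, one logical unit covers `scale` device pixels.
            g.scale(scale, scale);
            g.setColor(Color.BLACK);
            // A line that is one logical unit tall across the full width.
            g.fillRect(0, 0, width, 1);
        } finally {
            g.dispose();
        }
        return image;
    }

    public static void main(String[] args) {
        double scale = detectScaleFactor();
        BufferedImage image = renderScaled(100, 50, scale);
        System.out.println(image.getWidth() + " x " + image.getHeight());
    }
}
```

The point of the sketch is that the painting code stays in logical coordinates, and only the backing image and the graphics transform know about the extra pixels. On a 2x screen the one-unit line ends up two device pixels tall instead of being stretched from a single blurry row.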

This code now powers pretty much all the pixels you see in Substance 7.0 – once again, the same warning about images that are meant to be viewed only on retina / high-res screens applies to all the images in this post.


As usual, DragonCon comes to Atlanta over the Labor Day weekend. These are my personal highlights from the opening parade this year.


Around 2005 I was really into the whole field of non-photorealistic rendering (NPR). I’ve pored over dozens of research papers and spent months on implementing some of the basic building blocks and combining them together to recreate the results from a couple of those papers. It even got as far as submitting a talk proposal to SIGGRAPH of that year. I thought I had an interesting approach. All of the reviewers strongly disagreed.

Edge detection was one of the building blocks that I kept on thinking about long after that rejection. A bit later I started working on a completely different project – exploring genetic algorithms. The idea there is that instead of coming up with an algorithm that correctly solves a problem, you randomly mutate parts of the algorithm generation that you currently have and evaluate the performance of each mutation. The hope is that eventually these random modifications “find” a path towards the optimal solution that works for your input space. You might not understand exactly what’s going on, but as long as it gives you the right answers, that part might not be that important.
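The mutate-and-evaluate loop can be sketched in a few lines of Java. This is a heavily simplified illustration, not my original engine: it mutates two numeric coefficients rather than parts of an algorithm, it has no recombination step, and the target function, population size and mutation step are all arbitrary choices made for the example. The class name `MutationSearch` is hypothetical.

```java
import java.util.Random;

// Hypothetical, simplified mutation-and-selection loop.
final class MutationSearch {
    // Sample inputs on which every candidate is evaluated.
    private static final double[] XS = {0, 1, 2, 3, 4};

    // Assumed target for this example: y = 3x + 2.
    static double target(double x) {
        return 3 * x + 2;
    }

    /** Sum of squared differences between a*x + b and the target; lower is better. */
    static double error(double a, double b) {
        double sum = 0;
        for (double x : XS) {
            double diff = (a * x + b) - target(x);
            sum += diff * diff;
        }
        return sum;
    }

    /** Returns {a, b, error} of the best candidate found. */
    static double[] evolve(long seed, int generations) {
        Random random = new Random(seed);
        double bestA = 0;
        double bestB = 0;
        double bestError = error(bestA, bestB);
        for (int g = 0; g < generations; g++) {
            // The best candidate so far is the parent of this generation.
            double parentA = bestA;
            double parentB = bestB;
            for (int i = 0; i < 20; i++) {
                // Random mutation: nudge both coefficients by a small amount.
                double a = parentA + random.nextGaussian() * 0.1;
                double b = parentB + random.nextGaussian() * 0.1;
                double e = error(a, b);
                // Selection: keep a mutant only if it performs better.
                if (e < bestError) {
                    bestA = a;
                    bestB = b;
                    bestError = e;
                }
            }
        }
        return new double[] {bestA, bestB, bestError};
    }

    public static void main(String[] args) {
        double[] best = evolve(42L, 200);
        System.out.printf("a=%.3f b=%.3f error=%.5f%n", best[0], best[1], best[2]);
    }
}
```

Nothing in the loop knows how to solve the equation. It only knows how to score a candidate, which is exactly the part that makes the approach both appealing and hard to reason about.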

My homegrown implementation was to come up with a set of computation primitives – basic arithmetic operations, a conditional and a loop – and let it work on “solving” rather simple equations. It was rather slow, as I was basically running my own completely unoptimized VM on top of Java’s own VM. As I started spending less time working on it and more time just lazily thinking about it, I kept on bouncing two things in my head. One was to switch my genetic engine to work at the level of JVM bytecode operations. Instead of having a double-decker of VMs, the genetic modifications and recombinations would be done at the bytecode level, and then fed directly to the JVM. The second idea was to switch to doing something a bit more interesting – putting that genetic engine to work on image edge detection.

I’ve spent months thinking about various aspects of what could be done with such an engine, and how novel the entire thing would be when it’s all done. But I never got around to actually doing it.

Around 2007 as I was in the middle of working on a bunch of libraries for Swing (a few extra components, an animation module and a look-and-feel library), I fell in love with the idea outlined by some of the presentations around Windows Vista. I wrote about that in more detail a few years ago, but the core of it is rather simple – instead of drawing each UI widget as its own thing, you create a 3D mesh model of the entire UI, throw in a few lights and then hand it over to the rendering engine to draw the whole window.

If you have two buttons side by side, with just enough mesh detail and reflection on texture you can have buttons reflecting each other. You can mirror and distort the mouse cursor as it moves over the widget plane. As the button is clicked, you distort the mesh at the exact spot of the click and then bounce it back. Lollipop ripples, anyone?

I’ve spent months thinking about various aspects of what could be done with such an engine, and how novel the entire thing would be when it’s all done. But I never got around to actually doing it.

Around 2010 as I wound up all my Swing projects, I decided that it would be a good experience for me to dip my toes into the world of Javascript. So I took the Trident animation library that was written in Java (with hooks into Swing and SWT) and ported it to Javascript. It was actually my most-starred project on Github after it hit a couple of minor blogs.

I don’t know how things in the JS land are looking now, but back in 2010 I wanted a bit more from the language, especially around partitioning functionality into classes. Prototype-based inheritance was there, but it was quite inadequate for what I wanted. It probably was my fault, as I kept on going against the grain of the language. As the initial excitement started wearing down, I considered where I wanted to take those efforts. In my head I kept on going back to the demos I did for Trident JS, and particularly the Canvas object that was at the center of all of them.

Back in the Swing days my two main projects were the look-and-feel library (Substance) and a suite of UI components that had the Office ribbon and all the supporting infrastructure around them (Flamingo). So as I started spending less time working on the code and more time just lazily thinking about it, I thought about writing a UI toolkit that would combine everything that I’ve worked on in Swing and bring it to Javascript. It would have all the basic UI widgets – buttons, checkboxes, sliders, etc. It would all be skinnable, porting over the code that I already had in place in Substance. Everything would have animations from the port of Trident. I was already familiar with the complexity of custom event handling (keyboard / mouse) for the ribbon component in Flamingo. And it would all be implemented at the level of the global Canvas object that would host the entire UI.

I’ve spent months thinking about various aspects of what could be done with such an engine, and how novel the entire thing would be when it’s all done. But I never got around to actually doing it. Flipboard did that five years later. The web community wasn’t very pleased about it.

Some say that ideas are cheap. I wouldn’t go as far as that, even though I might have said it a few times in the past. I’d say that there are certainly brilliant ideas, and the people behind them deserve the full credit. But only when those ideas are put into reality. Only when you put in the time and the effort into making those ideas actually happen. Don’t tell me what you’re thinking about. Show me what you did with it. For now I’m zero for three on my grand ones.
