Continuing the ongoing series of interviews with creative artists working on various aspects of movie and TV productions, it is my pleasure to welcome Hillary Spera. In this interview she talks about the hidden complexity of what goes on behind the scenes to bring these stories to our screens, digital vs film, the current production landscape as the Corona-related restrictions are being slowly lifted, and her lifelong passion for capturing images. Around these topics and more, Hillary dives deep into her work on the recently released “The Craft: Legacy”.

Kirill: Please tell us about yourself and what drew you into this field.

Hillary: I didn’t know early on that I wanted to be a cinematographer, but I was always inspired by and interested in still photography. As a kid, I was always picking up a camera and taking photos whenever I could. It was my favorite way to get out into the world and experience it, through a lens. I had no idea what I was doing, but I was really compelled by it.

When I went to college, still photography continued to be my passion. I did it on my own as a hobby, as I was never formally trained other than a class in high school. There was a lot of trial and error. My college didn’t have a photography program that I was interested in, so I stumbled into cinematography as a way to make images, to tell stories visually. That’s when I found my love for it. It was really fun, and I also loved the aspect of collaboration, being part of a team. I ended up shooting everything I could get my hands on, always being present for that, taking every opportunity and never saying no. That’s what took me down the road to being a cinematographer. I never went to grad school for cinematography. I learned from just doing it, from being on set and shooting everything that I could. Making a lot of mistakes and learning from them. And continuing to shoot still photography as well.

Kirill: If you look at the evolution of technology, do you feel that it would be easier for you to get into the field today, as cameras become more affordable and some people are even shooting on their iPhones?

Hillary: I’ve thought about this a lot. I don’t know if I’d be as motivated to get into it now. I love making images. I’ve always made images and I always will. Even on my days off I’m shooting. But I came from film and I love the physical celluloid aspect of it, the tangibility of that. I’m not sure I would have gotten into it if the door had been through an iPhone instead of through film. That said, I do want to think that a love of making images would have prevailed, somehow.

I love that it’s easier now than ever, that it is accessible to pretty much everyone who has an interest. You see so many talented people shooting all kinds of stories, so many viewpoints. It’s also easier (somewhat) to make films now, and there are more avenues to show them. You have a million streaming platforms, so many film festivals, all these places to put things out into the world. But if I’m talking about myself, I fell in love with this analog way, and I don’t know if I would have the same relationship to it without starting that way. I learned by splicing 16mm movies together [laughs], and I feel that is what taught me so much about the craftsmanship of it, about understanding light and exposure and just the respect for the process.

(Left to right) Lourdes (Zoey Luna) Frankie (Gideon Adlon) Tabby (Lovie Simone) and Lily (Cailee Spaeny) perform rituals and talk about being cautious with their gifts in Columbia Pictures’ “The Craft: Legacy”.

Kirill: Would you say that the field of cinematography is losing something significant as film as a medium is fading away?

Hillary: It’s our challenge and responsibility to bring that to the digital world. At least from my perspective, the challenge is to always make it feel like it has the same weight to it as the films we learned from, those often shot on film. We work hard to take the digital edge off and make it, at the very least, feel like a hybrid between the two worlds. The tangibility. Often the reference is the look of 35mm, which is what we did for “The Craft: Legacy”. We wanted it to feel like it had the tangibility and texture of being shot on film, to feel that grain, to feel those values.

I don’t know if it’s losing something. I’d like to think that the spirit stays alive in the process and the collective references we all seek and possess. I think it’s our responsibility to continue the traditions. Visual storytelling is visual storytelling, and the medium almost doesn’t matter. It might be shot on iPhone, or on the biggest large format digital sensor, or on 35mm film. Our responsibility as cinematographers is to tell the story through images.

Kirill: How do you talk with people about what you do for a living?

Hillary: I have a lot of people in my life who do not come from a film background. It’s a good question.

At the base level, the cinematographer is involved with everything visual about the project, and that part is one of my favorite things about the craft. The collaboration with directors, production designers, costume designers, gaffers, key grips, camera team, sound effects, visual effects, stunts, etc – that is all involved and part of my responsibility as a cinematographer. Anything that relates to the image.

Getting deeper into it, my responsibility is also to watch, to listen and to interpret vision. That’s the fundamental part. There is this collective vision for the project, and my job is to interpret that and bring that visually to the project. And so much of it is managing, getting everyone on the same page to be working towards the same goal, and that collaboration. It always vacillates between the technical/managerial and the creative.

(Left to right) Lourdes (Zoey Luna) Frankie (Gideon Adlon) and Tabby (Lovie Simone) need a fourth to complete their coven in Columbia Pictures’ “The Craft: Legacy”.


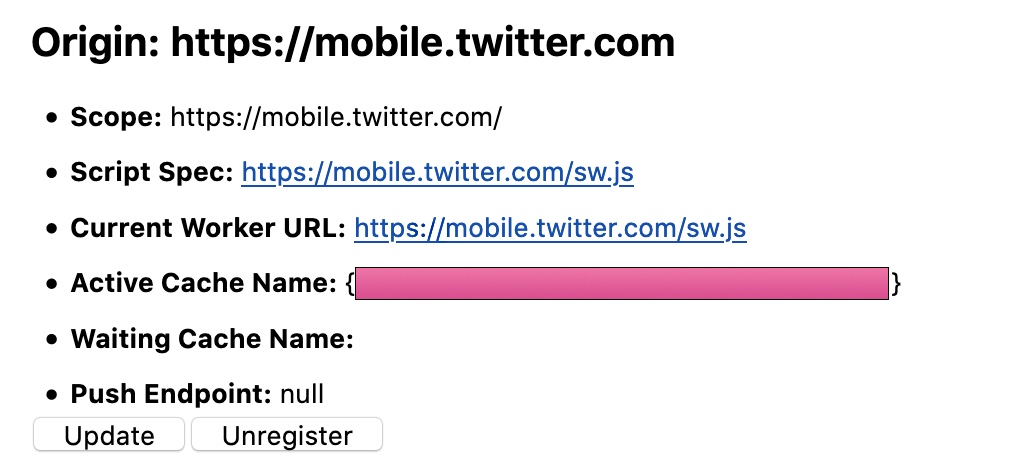

This started happening a few weeks ago. Using Firefox to load Twitter’s webpage would display this text message:

Can’t load twitter : https://twitter.com/ has experienced a network protocol violation that cannot be repaired.

One (rather annoying) way to get around it is to Shift+Reload the page, but you need to do it every single time you load the main stream or even a single tweet. Another, more permanent way is to type about:serviceworkers into the address bar, and then search for “twitter” on that page. You should see something like this:

Click the “Unregister” button and you should be good to go.

Substance comes with built-in support for animating state transitions for the core Swing components, as well as for all the Flamingo components.

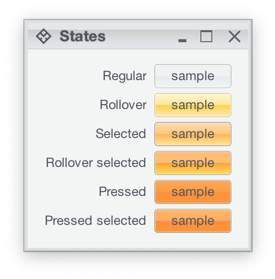

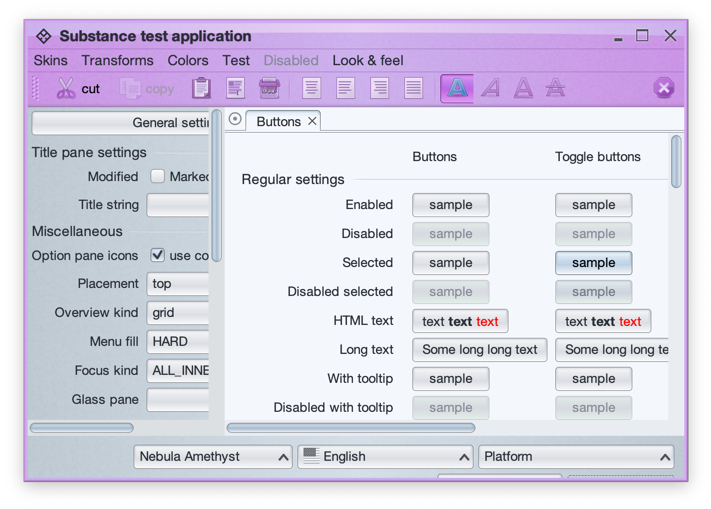

Let’s take a look at this screenshot from the component states documentation:

Here we see how one of the Substance skins (Office Silver 2007) provides different visuals for different component states – light yellow for rollover, light orange for selected, deeper orange for pressed, etc. As the component – such as JButton in this case – reacts to the user interaction and changes state, Substance smoothly animates the visuals of that component to reflect the new state. In the case of our JButton, that includes the inner fill, the border and the text.
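To make the idea of an animated state transition a bit more concrete, here is a minimal Java sketch that blends the fill, border and text colors of a button between two states as an animation position goes from 0 to 1. The StateVisuals class, the sample colors and the linear sRGB interpolation are assumptions made for illustration; this is not Substance's actual animation code, which is considerably more involved.

```java
import java.awt.Color;

// A minimal sketch of blending a button's visuals between two states.
// Assumption: channels are interpolated linearly in sRGB based on the
// animation position (0 = old state, 1 = new state). This illustrates the
// idea only; it is not Substance's actual animation code.
final class StateTransitionSketch {

    // Linearly interpolates each RGBA channel between two colors.
    public static Color blend(Color from, Color to, float position) {
        // Clamp the position so out-of-range values snap to an end state.
        float t = Math.max(0f, Math.min(1f, position));
        return new Color(
                channel(from.getRed(), to.getRed(), t),
                channel(from.getGreen(), to.getGreen(), t),
                channel(from.getBlue(), to.getBlue(), t),
                channel(from.getAlpha(), to.getAlpha(), t));
    }

    private static int channel(int from, int to, float t) {
        return Math.round(from + (to - from) * t);
    }

    // Hypothetical visuals of one button state: inner fill, border and text.
    static final class StateVisuals {
        final Color fill, border, text;

        StateVisuals(Color fill, Color border, Color text) {
            this.fill = fill;
            this.border = border;
            this.text = text;
        }

        // Every visual part moves toward the target state by the same amount.
        StateVisuals blendTo(StateVisuals target, float position) {
            return new StateVisuals(
                    blend(fill, target.fill, position),
                    blend(border, target.border, position),
                    blend(text, target.text, position));
        }
    }

    public static void main(String[] args) {
        // Hypothetical colors: a light rollover state with dark text and a
        // dark selected state with light text.
        StateVisuals rollover = new StateVisuals(
                new Color(255, 245, 200), new Color(200, 170, 90), Color.BLACK);
        StateVisuals selected = new StateVisuals(
                new Color(40, 60, 90), new Color(20, 30, 50), Color.WHITE);
        // Print five frames of the rollover-to-selected transition.
        for (int frame = 0; frame <= 4; frame++) {
            float position = frame / 4f;
            StateVisuals v = rollover.blendTo(selected, position);
            System.out.printf("%.2f fill=%s text=%s%n", position, v.fill, v.text);
        }
    }
}
```

Driving the fill, border and text from a single position keeps all parts of the button in lockstep, so no part of it lags behind the others during the transition.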

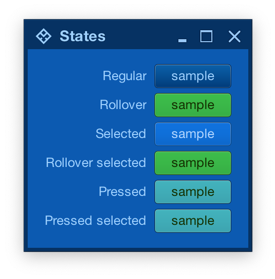

This comes in particularly handy for skins that mix light and dark visuals for different states of the same component. Here is the same UI under the Magellan skin:

Note how the selected button uses light text on dark background, while the rollover selected button uses dark text on light background. If your UI uses the Magellan skin (or any other skin that uses a mix of light and dark color schemes), Substance will do the right thing to animate all relevant parts of your UI as the user interacts with it.

What about the icons?

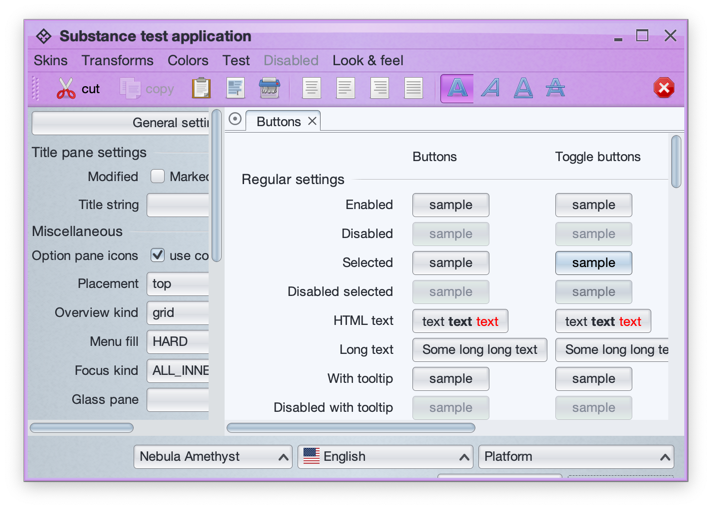

This is a screenshot of the main Substance demo app running under the Nebula Amethyst skin. As UIs with lots of icons can get pretty busy, Substance provides APIs to theme the icons based on the current skin visuals.

The APIs are in the SubstanceCortex class, one in the GlobalScope to apply to all icons in your app, and one in the ComponentScope to configure icon theming for a specific control:

- SubstanceCortex.GlobalScope.setIconThemingType for global icon theming
- SubstanceCortex.ComponentScope.setIconThemingType for per-component icon theming

At the present moment, two icon theming types are supported. The first is SubstanceSlices.IconThemingType.USE_ENABLED_WHEN_INACTIVE which works best for multi-color / multi-tone icons and Substance skins with multi-color color schemes. Here is the same UI with this icon theming type:

Take a look at the icons in the toolbar. The icon for the active / selected button (bold A) is still in full original color, but the rest of the icons have been themed with the colors of the enabled color scheme configured for the toolbar area of the Nebula Amethyst skin. This works well in this particular case because:

- The original Tango icons have enough contrast to continue being easily recognizable when they are themed in what is essentially a monochrome purple color palette

- The Nebula Amethyst skin uses color schemes with different background colors for the ultra light to ultra dark range, so that when these colors are used to theme the original icons, the icons remain crisp and legible.
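One way to picture why these two conditions matter is a rough Java sketch that maps each pixel's brightness onto a gradient between a dark and a light color taken from a color scheme, keeping the pixel's alpha. Reducing the scheme to two endpoint colors and using Rec. 601 luma weights are assumptions for illustration; Substance's own icon filter may work differently.

```java
import java.awt.Color;
import java.awt.image.BufferedImage;

// A rough sketch of theming a multi-color icon with a color scheme.
// Assumption: the scheme is reduced to two hypothetical endpoint colors
// (ultra dark and ultra light), and each pixel's brightness selects a point
// on the gradient between them. Substance's own filter may work differently.
final class SchemeIconThemingSketch {

    public static BufferedImage theme(BufferedImage icon, Color ultraDark, Color ultraLight) {
        int w = icon.getWidth();
        int h = icon.getHeight();
        BufferedImage result = new BufferedImage(w, h, BufferedImage.TYPE_INT_ARGB);
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                int argb = icon.getRGB(x, y);
                int alpha = (argb >>> 24) & 0xFF;
                int r = (argb >> 16) & 0xFF;
                int g = (argb >> 8) & 0xFF;
                int b = argb & 0xFF;
                // Perceived brightness using Rec. 601 luma weights, in [0, 1].
                double luma = (0.299 * r + 0.587 * g + 0.114 * b) / 255.0;
                int tr = lerp(ultraDark.getRed(), ultraLight.getRed(), luma);
                int tg = lerp(ultraDark.getGreen(), ultraLight.getGreen(), luma);
                int tb = lerp(ultraDark.getBlue(), ultraLight.getBlue(), luma);
                // Keep the original alpha so the icon keeps its shape.
                result.setRGB(x, y, (alpha << 24) | (tr << 16) | (tg << 8) | tb);
            }
        }
        return result;
    }

    private static int lerp(int from, int to, double t) {
        long value = Math.round(from + (to - from) * t);
        // Clamp to guard against floating-point drift at the ends.
        return (int) Math.max(0, Math.min(255, value));
    }

    public static void main(String[] args) {
        // A tiny 2x1 "icon": one white pixel and one black pixel.
        BufferedImage icon = new BufferedImage(2, 1, BufferedImage.TYPE_INT_ARGB);
        icon.setRGB(0, 0, 0xFFFFFFFF);
        icon.setRGB(1, 0, 0xFF000000);
        // Hypothetical purple endpoints, loosely in the spirit of Nebula Amethyst.
        BufferedImage themed = theme(icon, new Color(40, 0, 80), new Color(230, 200, 255));
        System.out.printf("%08X %08X%n", themed.getRGB(0, 0), themed.getRGB(1, 0));
    }
}
```

Because this sketch keeps only brightness, two original colors of similar brightness collapse into the same themed shade, which is why icons with good contrast and a scheme with a wide light-to-dark range hold up best under this kind of theming.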

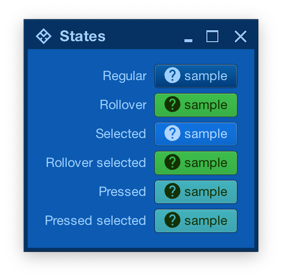

The second option is SubstanceSlices.IconThemingType.FOLLOW_FOREGROUND which works best for single-tone icons found in such popular icon packs as Material, Ionicons, Friconix, Flexicons, Font Awesome and many others:

In this screenshot of the same UI from earlier under the Magellan skin, all the buttons use the same help icon from the Material icon pack converted by Photon. At runtime, Substance themes the icon to follow the foreground / text color of the button for a consistent look across all component states.
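The core idea of following the foreground can be sketched in a few lines of Java: a single-tone icon carries its shape in the alpha channel, so recoloring it amounts to keeping each pixel's alpha and swapping in the component's foreground color. The class and method names below are hypothetical, and this is an illustration of the technique rather than Substance's implementation.

```java
import java.awt.Color;
import java.awt.image.BufferedImage;

// A minimal sketch of "follow foreground" theming for a single-tone icon.
// Assumption: only the icon's alpha channel (its shape) matters, so every
// pixel is repainted in the component's foreground color. This is an
// illustration, not Substance's implementation.
final class ForegroundIconThemingSketch {

    public static BufferedImage followForeground(BufferedImage icon, Color foreground) {
        int w = icon.getWidth();
        int h = icon.getHeight();
        BufferedImage result = new BufferedImage(w, h, BufferedImage.TYPE_INT_ARGB);
        int rgb = foreground.getRGB() & 0x00FFFFFF;
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                int iconAlpha = (icon.getRGB(x, y) >>> 24) & 0xFF;
                // Combine the icon's coverage with the foreground's own alpha.
                int alpha = iconAlpha * foreground.getAlpha() / 255;
                result.setRGB(x, y, (alpha << 24) | rgb);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        BufferedImage icon = new BufferedImage(2, 1, BufferedImage.TYPE_INT_ARGB);
        icon.setRGB(0, 0, 0xFF336699); // opaque pixel of the original icon
        icon.setRGB(1, 0, 0x80336699); // half-transparent anti-aliased edge
        // Hypothetical foreground colors for two button states.
        for (Color fg : new Color[] {Color.BLACK, Color.WHITE}) {
            BufferedImage themed = followForeground(icon, fg);
            System.out.printf("%08X %08X%n", themed.getRGB(0, 0), themed.getRGB(1, 0));
        }
    }
}
```

Keeping the original alpha preserves anti-aliased edges, so the same icon reads cleanly whether the button text is dark on a light fill or light on a dark fill.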

Checking in on an earlier post from last year about being skeptical of the whole self-driving cars thing, I was reminded of this article from last April and this quote from Elon Musk:

As for full autonomy, Musk noted: “the software problem should not be minimized.” He continued that, “it’s a very difficult software problem.” Still, he promised that Teslas will be capable of self-driving by the end of this year and self-driving robo-taxis will be on the road in 2020. Also, in two years, the company will be making cars without steering wheels or pedals at all.

“If you fast forward a year, maybe a year three months, we’ll have over a million robo-taxis on the road.”

Usually futurists give themselves a bit more breathing room, say 25-30 years, by which time they are either no longer with us, or can claim to have been let down by the inept technologists who have failed to deliver on their “vision”. In this particular case, though, Tesla is barely at level 2, which is basic, partial automation. We’re quite a long way off from a million robo-taxis on the road.

Meanwhile, Tesla’s official Autopilot page uses some clever wording that makes it seem as if they are already at full self-driving, level 5 (highlights mine):

All new Tesla cars have the hardware needed in the future for full self-driving in almost all circumstances. The system is designed to be able to conduct short and long distance trips with no action required by the person in the driver’s seat.

All you will need to do is get in and tell your car where to go. If you don’t say anything, the car will look at your calendar and take you there as the assumed destination or just home if nothing is on the calendar. Your Tesla will figure out the optimal route, navigate urban streets (even without lane markings), manage complex intersections with traffic lights, stop signs and roundabouts, and handle densely packed freeways with cars moving at high speed. When you arrive at your destination, simply step out at the entrance and your car will enter park seek mode, automatically search for a spot and park itself. A tap on your phone summons it back to you.

Sprinkle a few “will”s in there, and you make it sound like it’s yours once you buy one of their cars. The press kit, on the other hand, is a bit more realistic, bringing it down quite a few notches (highlights mine):

Autopilot is an advanced driver assistance system that is classified as a Level 2 automated system according to SAE J3016, which is endorsed by the National Highway Traffic Safety Administration. This means Autopilot also helps with driver supervision. One of our main motivations for Autopilot is to help increase road safety, and it’s this philosophy that drives our development, validation, and rollout decisions.

Autopilot is intended for use only with a fully attentive driver who has their hands on the wheel and is prepared to take over at any time. While Autopilot is designed to become more capable over time, in its current form, it is not a self-driving system, it does not turn a Tesla into an autonomous vehicle, and it does not allow the driver to abdicate responsibility.

So maybe, at some point in the future, it “will” be there. But not any day now, despite the ongoing barrage of lofty promises.
