Over the course of this week i’ve talked about movements of physical objects in the real world, and how they can be applied to animating pixels on the screen. The last two entries have just skimmed the surface of animating UI objects, and even such seemingly straightforward areas as color animations and scroll animations can be much deeper and more complicated than they originally seem.

Every movement in the real world is governed by the laws of physics. Sometimes these laws are simple, and sometimes they are not. Understanding and emulating these laws in the virtual world of pixels takes time. It takes time to analyze how the objects in the physical world move. It takes time to find the right physical model for the specific dynamic change on the screen. It takes time to implement this physical model in the code. It takes time to optimize the implementation performance so that it is fluid and does not drain too much device power. Is it worth it?

Is it worth spending your time as a designer? Is it worth spending your time as a programmer? Is it worth spending your time as a tester? If you care about your users, the answer is a resounding yes.

People don’t read documentation. Nobody has time to do it, and it gets worse with every passing year. We are confronted with too much information, and the average attention span keeps on shrinking. A recent trend towards separation between data providers and application providers makes it incredibly simple for people to switch between different views on the data of their interest (think Twitter clients, for instance). People will start using your application, play with it for a few moments (minutes, if you’re lucky) and move on to the next shiny thing on a whim. How can you capture such a volatile audience?

Intuitive design is a popular term in the user experience community. Alan Cooper writes the following about intuition in his About Face 3:

Intuition works by inference, where we see connections between disparate objects and learn from these similarities, while not being distracted by their differences. We grasp the meaning of the metaphoric controls in an interface because we mentally connect them with other things that we have already learned. This is an efficient way to take advantage of the awesome power of the human mind to make inferences. However, this method also depends on the idiosyncratic human minds of users, which may not have the requisite language, knowledge, or inferential power necessary to make those connections.

What does it mean for an interface to be intuitive? You click on a button and it does what you expected it to do. You want to do something, and you find how to do it in the very first place you look. The only surprises that you see are the good ones. The application makes you feel good about yourself.

This is definitely not easy. And you must use every available tool that you can find. How about exploiting the user itself to make your job easier? How about building on the existing knowledge of your users and their experiences with related tools in either real or virtual domain? As quoted above, not all knowledge and not all experiences are universal, but the animations are.

We all live on the same planet, and we are all governed by the same physical laws. Applying these laws to the changes in your application (in color, shape, position etc) will build on the prior knowledge of how things work in the real world. Things don’t move linearly in the real world, and doing so on the screen will trigger a subconscious response that something is wrong. Things don’t immediately change color in the real world, and that’s another trigger. Moving objects cannot abruptly change direction in the real world, and that’s one more trigger. A few of these, and your user has moved on.

Drawing on the existing user experience is an incredibly powerful tool – if used properly. Some things are universal, and some things change across cultures. Distilling the universal triggers and transplanting them to your application is not an easy task. It requires a great deal of time and expertise from both the designers and programmers. And if you do it right, you will create a friendly and empowering experience for your users.

In a roundabout way, this brings me to the visual cues that are pervasive throughout the Avatar movie. If you haven’t seen it yet, you may want to stop reading – but i’m not going to reveal too much. Our first exposure to Neytiri – the native Na’vi – is around half an hour into the movie. Apart from saving Jake’s life, she is quite hostile, and she does not hide it from him. What i find interesting is how James Cameron has decided to highlight her hostility. It is not only through her words and acts, but also through the body language, the hand movements and the facial expressions. They are purposefully inhuman – in the “not regularly seen done by humans” sense of the word. The way she breaks the sentences and moves her upper body with the words, the movement of facial muscles when she tells him that he doesn’t belong there, and the hand gestures used throughout their first encounter certainly do not make the audience relate to her. On the contrary, the first impression reinforced by her physical attitude is that of hostility, savageness and animosity.

The story, however, requires you to associate with the plight of the locals, and root against the invasion of the humans who do not understand the spiritual connectivity of the Na’vi world. The love interest between Jake and Neytiri is a potent catalyst, and it is fascinating to see how Cameron exploits the human emotions and makes you – in words of Colonel Quaritch – betray your own race. If you have seen the movie, imagine what it would feel like if Na’vi looked like real aliens – from Ridley Scott / David Fincher / the very same James Cameron saga. It would certainly cost much less money to produce, but would you feel the same seeing two ugly aliens falling in love and riding equally ugly dragons?

The Na’vi look remarkably humanoid – just a little taller. The only outer difference is the tail and the number of fingers. Other than that – a funky (but not too funky) skin color, the same proportion of head / limbs / torso, the same facial features, no oozing slime and the same places where the hair doesn’t grow. This has certain technical advantages – mapping the movements of real actors onto the Na’vi bodies – including the facial expressions. In Avatar, however, there is a much deeper story behind the facial expressions. Cameron builds on your prior experience with the outward expression of human emotion in order to build your empathy towards the Na’vi cause, and make you root for them in the final battle scene. How likely is it that the Na’vi have developed not only the same body structure, but the same way of reflecting positive emotions in facial expressions?

Our ability to relate to other human beings is largely based on our own experiences of pain, sorrow, joy, love and other emotions. Neytiri displays remarkably human-like emotions – especially throughout the Ikran taming / flying scene halfway into the movie. Cameron uses our own human emotions to guide us into believing in the Na’vi cause, and this is achieved by building on the universally human vocabulary of facial expressions. To believe the story, we must believe in the characters, and what better way to do so than making us associate with both sides of the relationship between Jake and Neytiri?

Make your users productive. Make them happy that they have spent time in your application. Make them want to come back and use your other products. Make an emotional connection. Build on what they know. Make them believe that every choice they make is their own. Or better yet, guide them towards where you want them to go while making them believe that they are in charge.

After seeing how the rules of the physical world can be applied to animating colors, it’s time to talk about layout animation. If you’re a programmer, it’d be safe to say that you spend most of your waking hours in your favorite IDE – and if you’re not, you should :) Writing and modifying code in the editor part of the IDE takes, quite likely, most of the time you spend there. A quite unfortunate characteristic of such an environment is that the UI around you is highly static. Of course, you see a bunch of text messages being printed to different views, or the search view being populated with the search results, or the problems view showing new compile errors, or maybe you invoke a few configuration dialogs and check a couple of settings. All of these, however, do not change the layout of the views that you are seeing.

The rest of the world spends their time outside IDE, browsing their Twitter / Facebook / … streams, updating the Flickr / Picasa albums, downloading songs on iTunes, tuning into Pandora, making selections in the Netflix queues and what not. These activities are highly dynamic, and feature “information tiles” – data areas that display information on the status, pictures, songs, or movies that are relevant to the current view. While the amount of available items is certainly finite, it does not – and should not – fit on a single screen. Thus, activities such as searching, filtering and scrolling are quite common. Let’s take a look at one such activity.

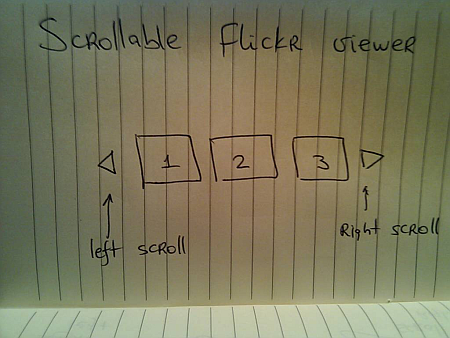

Suppose you have a scrollable widget that shows three pictures from your favorite Flickr stream in your blog footer:

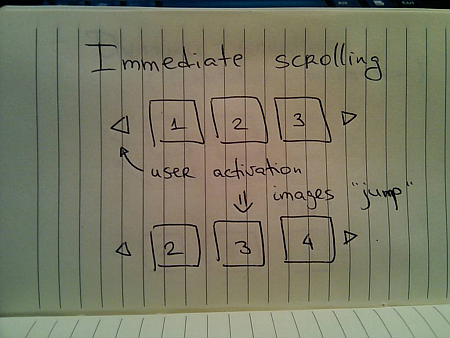

Here, the user can activate the left / right scroll buttons to view the next / previous batch of pictures – scrolling either one by one, or page by page. One option is to scroll immediately – as soon as the user activates the scroll button, the images just “jump” to the new locations:

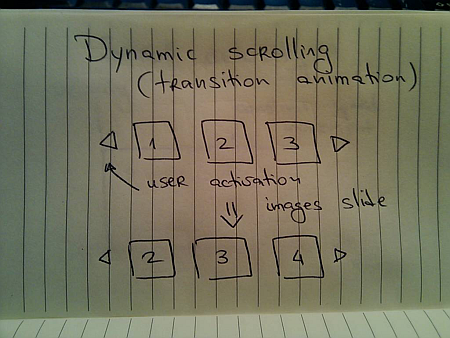

Simple to implement, but very user-unfriendly. You’re making the user remember the previous state of the application – where each image was displayed – and make the mental connection between the old state, the button press and the new state. It would be fairly correct to say that immediate scroll is a persona non grata in the RIA world:

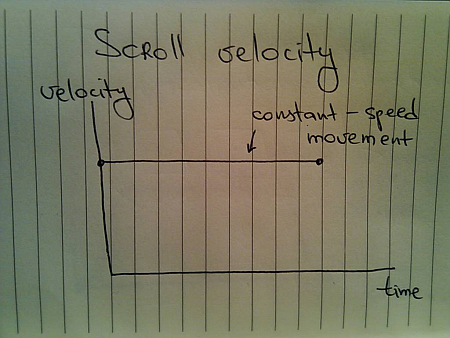

Now that you know that you want to slide the images, the next question is how to do it. Going back to the previous entries, a typical programmer’s solution is constant-speed scroll:

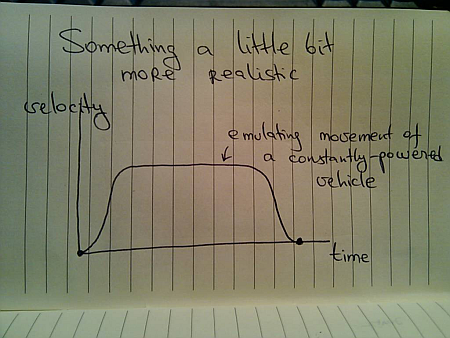

If you’ve followed this series so far, you know that this will not feel natural since it does not properly emulate movement of physical objects. How about emulating the movement of a man-driven vehicle:

Here, we have a short acceleration phase, followed by the “cruising” scrolling, with the eventual deceleration as the scrolling stops. Is this the correct model? Not so much – the cruising of the physical vehicle happens because you’re constantly applying the matching pressure on the gas pedal (or let the cruise control do it for you).

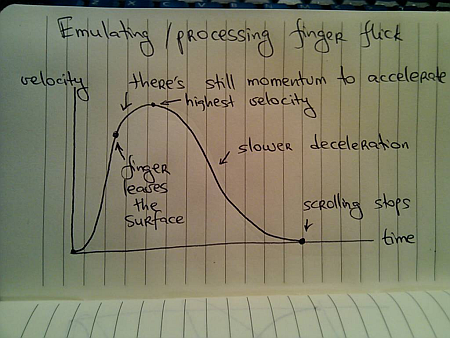

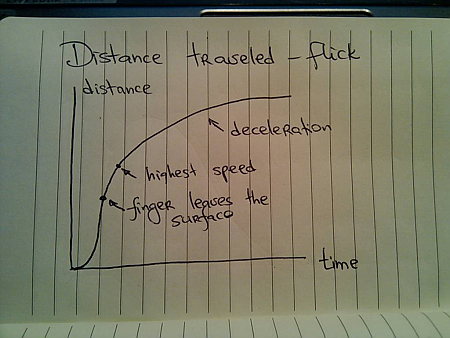

In this case, the user had a rather short interaction with the UI – clicking the button. The scrolling continues well beyond the point of the press end, and as such there is nothing that can “power” this constant-speed movement. A better physical model is that of the finger flick – a common UI idiom in touch-based devices such as smart phones or tablets:

Here, you have a very quick acceleration phase as the user starts moving the finger on the UI surface, followed by a slower acceleration attributed to the momentum left by the finger. Once the scrolling reaches its maximum speed, it decelerates – at a slower rate – until the scrolling stops.
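To make the three models a bit more concrete, here is a rough Python sketch of their velocity profiles over normalized time (0 is the button press, 1 is the end of the scroll). The function names, the breakpoints (`ramp`, `push`, `peak`) and the 0.7 split between the two acceleration phases are illustrative assumptions, not measured values. The distance travelled is the running integral of the velocity, normalized so that every model ends exactly one scroll away.

```python
from typing import Callable, List


def constant_speed(t: float) -> float:
    """Same velocity for the whole animation."""
    return 1.0


def vehicle(t: float, ramp: float = 0.2) -> float:
    """Short acceleration, cruising, then deceleration (a trapezoid)."""
    if t < ramp:
        return t / ramp
    if t > 1.0 - ramp:
        return (1.0 - t) / ramp
    return 1.0


def finger_flick(t: float, push: float = 0.1, peak: float = 0.3) -> float:
    """Very quick acceleration, slower acceleration up to the peak, long deceleration."""
    if t < push:
        return 0.7 * t / push                      # finger is on the surface
    if t < peak:
        return 0.7 + 0.3 * (t - push) / (peak - push)  # momentum left by the finger
    return (1.0 - t) / (1.0 - peak)                # slowing down until the stop


def distance_curve(velocity: Callable[[float], float], steps: int = 1000) -> List[float]:
    """Integrate the velocity (midpoint rule) and normalize the result to end at 1.0."""
    dt = 1.0 / steps
    total = 0.0
    samples = [0.0]
    for i in range(steps):
        total += velocity((i + 0.5) * dt) * dt
        samples.append(total)
    return [s / total for s in samples]


halfway = {f.__name__: distance_curve(f)[500] for f in (constant_speed, vehicle, finger_flick)}
```

With these particular numbers, the constant-speed and vehicle models have covered half of the distance at the halfway point in time, while the finger-flick model is already well past half: most of its movement happens early, and the rest of the time is spent settling.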

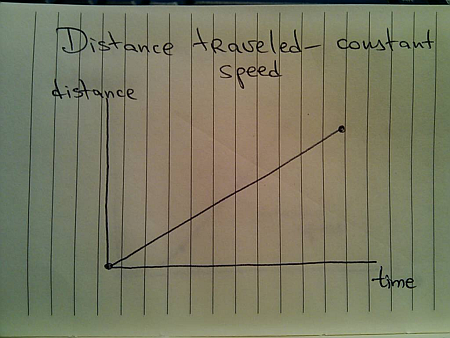

Let’s take a look at the distance graphs. Here’s the one for the constant-speed movement:

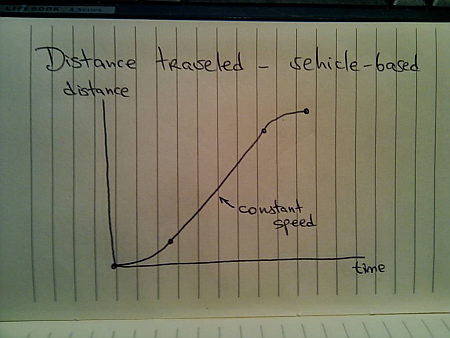

Here’s the one for the vehicle-emulating movement:

And finally, here’s the one for finger-flick model:

Now let’s take a look at what happens when the user initiates another interaction while you’re still processing (animating) the previous one. You cannot ignore such scenarios once you have animations in your UI – imagine if you wanted to go to page 200 of a book and had to turn the pages one by one. In the real world, you do not wait until the previous page has been completely turned in order to turn the next page.

The same exact principle applies to scrolling these information tiles. While you’re animating the previous scroll, the user must be able to initiate another scroll – in the same or the opposite direction. If you have read the previous entries, this directly relates to switching direction in the physical world.

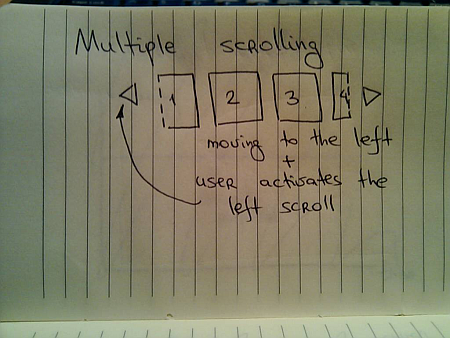

Suppose you’re in the middle of the scrolling animation:

And the user presses the left scroll button once again. You do not want to wait until the current scroll is done. Rather, you need to take the current animation and make it scroll to the new position. Here, an interesting question arises – do you scroll faster?

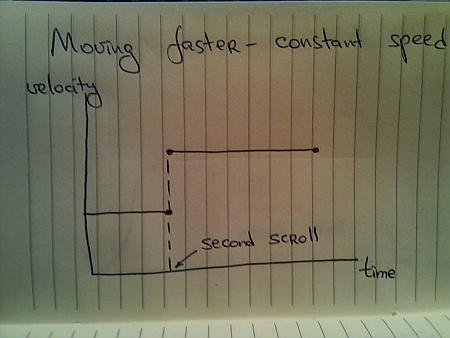

For the specific scenario that is used here – scrolling the images – you have different options. You can maintain the same scrolling speed, or you can increase the scrolling speed in order to cover more “ground” in the same amount of time. Let’s take a look at some numbers.

- Suppose it takes 1000ms to make the full scroll.

- 800ms after the scroll has started, the user initiates another scroll in the same direction.

What are the options?

1. Maintain the same scroll speed, effectively completing the overall scroll in 2000ms.

2. Complete the overall scroll in the same time left for the first one – effectively completing the overall scroll in 1000ms.

3. Combine the distance left for the first scroll and the full distance required for the second scroll, and complete the combined distance in 1000ms – effectively completing the overall scroll in 1800ms.

There is no best solution. There is only the best solution for the specific scenario. Given the specific scenario at hand – scrolling images – i’d say that option 1 can be ruled out immediately. If the user initiates multiple scrolls, those should be combined into faster scrolls. What about option 2? It’s certainly simpler than option 1, but it can result in unsettlingly fast – or almost immediate – scrolls the closer the second scroll initiation gets to the completion of the first scroll. In our case, the second scroll initiation came 200ms before the first scroll was about to be completed. Now, you cover the remaining distance and the full distance for the second scroll in only 200ms. It is going to be fast, but it can get too fast.
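The numbers behind the three options are easy to check with a few lines of Python. This is only a back-of-the-envelope sketch: it assumes constant-speed scrolling (so 80% of the first scroll is done after 800ms), and the names `Retarget` and `retarget_options` are made up for the illustration.

```python
from dataclasses import dataclass


@dataclass
class Retarget:
    remaining_ms: float   # time from the second click until everything stops
    total_ms: float       # time from the first click until everything stops
    speed_factor: float   # speed after the second click, relative to a single scroll


def retarget_options(full_ms: float, elapsed_ms: float) -> dict:
    base_speed = 1.0 / full_ms                # one scroll distance per full_ms
    left = 1.0 - elapsed_ms * base_speed      # assumption: constant speed so far
    combined = left + 1.0                     # rest of scroll one plus all of scroll two

    def make(remaining_ms: float) -> Retarget:
        speed = combined / remaining_ms
        return Retarget(remaining_ms, elapsed_ms + remaining_ms, speed / base_speed)

    return {
        "option 1 (same speed)": make(combined / base_speed),
        "option 2 (same deadline)": make(full_ms - elapsed_ms),
        "option 3 (restart the clock)": make(full_ms),
    }


options = retarget_options(full_ms=1000, elapsed_ms=800)
for name, result in options.items():
    print(f"{name}: done after {result.total_ms:.0f}ms, speed x{result.speed_factor:.1f}")
```

Under these assumptions option 2 has to move about six times faster than a regular scroll, while option 3 needs only about a 20% speed-up, which is why option 2 gets uncomfortable as the second click lands closer to the end of the first scroll.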

If we adopt the third option for this specific scenario, the velocity graph for the constant-speed scrolling looks like this:

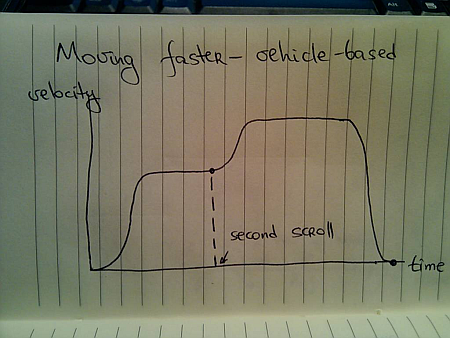

For the vehicle-based model it looks like this:

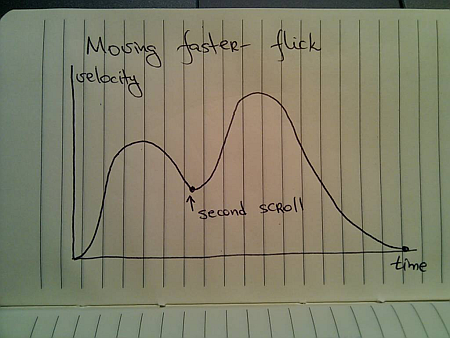

And for the finger flick based model it looks like this:

These two entries (on colors and scrolling) have shown just a glimpse of complexity brought about by adding dynamic behavior to your UIs. Is spending time on proper design and implementation of animations based on the real world justified, especially when the animations themselves are short? This is going to be the subject of the final part.

To be concluded tomorrow.

After seeing how the rules of the physical world constrain and shape movements of real objects, it’s time to turn to the pixels. State-aware UI controls that have become pervasive in the last decade are not pure eye candy. Changing the fill / border color of a button when you move the mouse cursor over it serves as the indication that the button is ready to be pressed. Consistent and subtle visual feedback of the control state plays a significant role in enabling a flowing and productive user experience, and color manipulation is one of the most important techniques.

Electron gun was one of my favorite technical terms in the eighties, and i remember being distinctly impressed by the fact that any visible color can be simulated with the right mix of red, green and blue phosphor cells. Somehow, mixing equal amounts of red, green and blue blobs of play-doh always left me with a brownish green bigger blob, but the pictures on the screen were certainly colored.

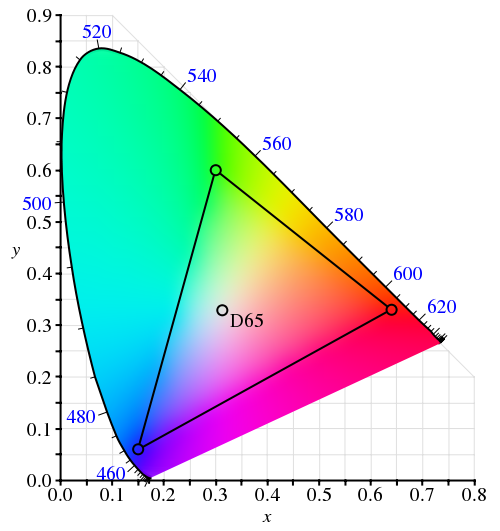

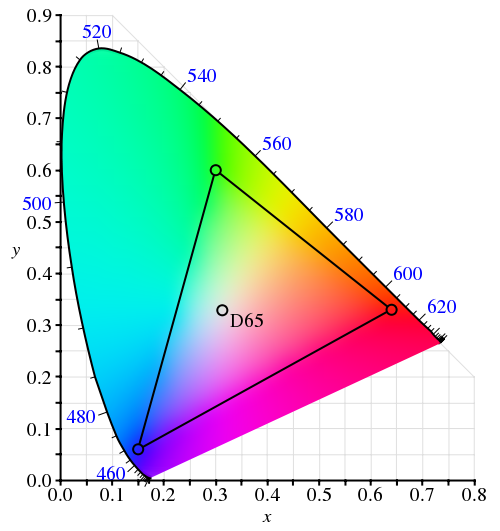

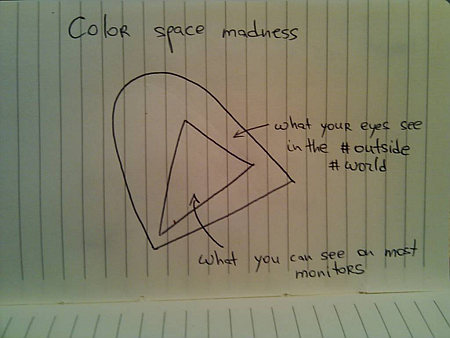

If you have a few hours to kill, color theory is a topic as endless as it gets. One of the basic terms in color theory is that of chromaticity – which refers to the pure hue of the color. It is usually illustrated as a tilted horseshoe, with one of the sides representing the wavelengths of the visible spectrum (image courtesy of Wikimedia):

The problem is that average monitors (not only CRT, but LCD / plasmas as well) cannot reproduce the full visible spectrum. In color theory this is referred to as the color space – a mathematical model that describes how colors can be represented by numbers. The sRGB color space is one of the most widely used for consumer displays. Put simply, it is a triangle with red, green and blue points inside the chromaticity graph (courtesy of Wikimedia):

The ironic thing about this diagram is that it cannot be faithfully shown on a display that uses the sRGB color space – since it cannot reproduce any of the colors outside the inner triangle (color space in printing is an equally lengthy subject).

Now let’s see whether the constraints of the moving physical objects are relevant for animating color pixels on the screen.

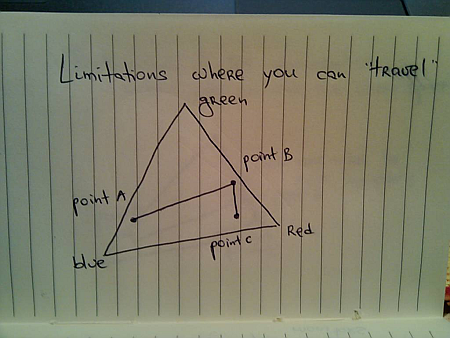

Suppose you have a button. It is displayed with light blue inner fill. When the user moves the mouse over the button (rollover), you want to animate the inner fill to yellow, and when the user presses the button, you want to animate the inner fill to saturated orange. These three colors represent anchor points of a movement path inside the specific color space:

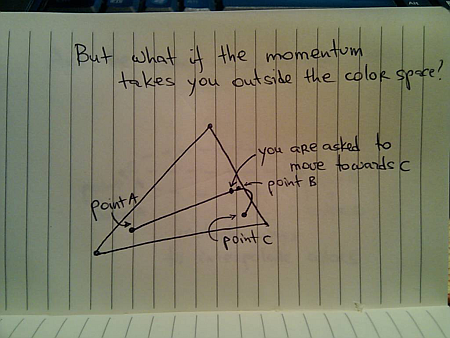

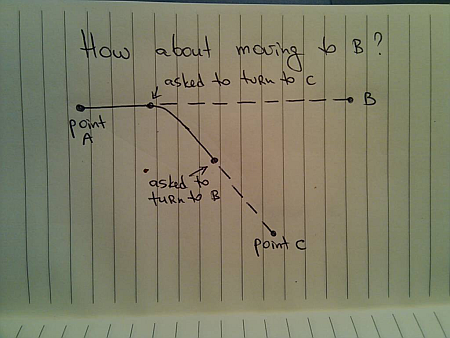

Imagine what happens when the user moves the mouse over the button, and presses it before the rollover animation has been completed. The direct analogy to the physical world is an object that was moving from point A to point B, and is now asked to turn to point C before reaching B. If the color animation reflects the momentum / inertia of the physical movement, the trajectory around point B may take the path outside the color space:

This is similar to physical world limitations – say, if point B is very close to a lake, and you don’t want to drive your new car into it. In this case you will need to clamp the interpolated color to lie inside the confines of the specific color space.
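As a minimal sketch of that clamping step, assume plain 8-bit RGB channels and a momentum that carries the animation 20% past the yellow anchor. Clamping each channel to 0..255 is a simplification of "staying inside the color space", and the helper names and color values are illustrative.

```python
LIGHT_BLUE = (173, 216, 230)
YELLOW = (255, 255, 0)


def extrapolate_rgb(start, end, t):
    """Linear interpolation that is allowed to overshoot: t > 1 goes past `end`."""
    return tuple(a + (b - a) * t for a, b in zip(start, end))


def clamp_rgb(color):
    """Pull every channel back into the displayable 0..255 range."""
    return tuple(max(0, min(255, round(c))) for c in color)


# Momentum carries the animation 20% past the yellow anchor point.
overshoot = extrapolate_rgb(LIGHT_BLUE, YELLOW, 1.2)
displayable = clamp_rgb(overshoot)

print(overshoot)     # red and green end up above 255, blue below 0
print(displayable)   # every channel is back inside 0..255
```

Here the clamped color lands right back on yellow, so on screen the overshoot simply looks like the fill resting on the anchor color for a moment before it turns towards the next one.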

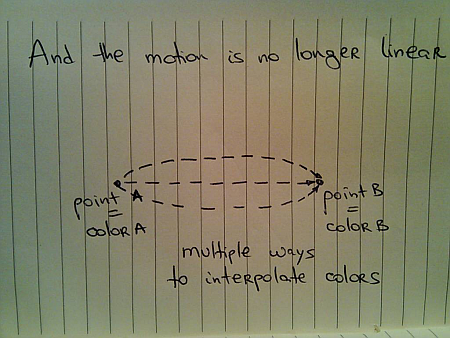

In the physical world, if you want to get from point A to point B, you would usually take the shortest route. This is not so simple if you’re interpolating colors. There’s a whole bunch of different color spaces (RGB, XYZ and HSV are just a few), and each one has its own “shortest” route that connects two colors:

Chet’s entry from last summer has a short video and a small test application that shows the difference between interpolating colors in RGB and HSV color spaces.
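To get a feel for how different those routes can be, here is a small sketch built on Python’s standard `colorsys` module, with channels expressed as floats between 0 and 1. The `mix_rgb` / `mix_hsv` helpers are made up for this example, and taking the shorter way around the hue circle is one possible choice rather than the only correct one.

```python
import colorsys


def lerp(a: float, b: float, t: float) -> float:
    return a + (b - a) * t


def mix_rgb(c1, c2, t):
    """Straight line between the two colors inside the RGB cube."""
    return tuple(lerp(a, b, t) for a, b in zip(c1, c2))


def mix_hsv(c1, c2, t):
    """Interpolate hue, saturation and value separately."""
    h1, s1, v1 = colorsys.rgb_to_hsv(*c1)
    h2, s2, v2 = colorsys.rgb_to_hsv(*c2)
    # Assumption: travel the shorter way around the hue circle.
    dh = (h2 - h1 + 0.5) % 1.0 - 0.5
    h = (h1 + dh * t) % 1.0
    return colorsys.hsv_to_rgb(h, lerp(s1, s2, t), lerp(v1, v2, t))


RED = (1.0, 0.0, 0.0)
GREEN = (0.0, 1.0, 0.0)

rgb_mid = mix_rgb(RED, GREEN, 0.5)   # a dark olive: both channels at half strength
hsv_mid = mix_hsv(RED, GREEN, 0.5)   # passes through a fully saturated yellow
```

Going from red to green, the halfway point in RGB is a muddy olive, while the halfway point in HSV is a bright yellow. Same two anchor colors, two quite different trips.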

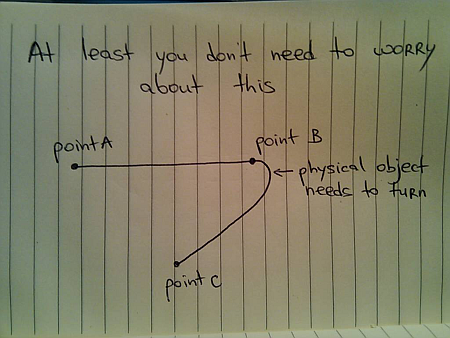

When you’re interpolating colors, the analogy to a moving physical object holds as far as the current direction, velocity and momentum. However, the moving “color object” does not have any volume – unlike the real physical objects. Thus, if it went from point A to point B (and stopped completely), and is now asked to either go back to A or go to point C, it does not need to turn – compare this to the case above when it was asked to turn to C while it was still moving towards B.

However, the rules of momentum still apply while the animation is still in progress. Suppose you’re painting your buttons with light blue color (point A) when they are inactive, and with yellow color (point B) when the mouse is over them. The user moves the mouse over one of the buttons and it starts animating – going from point A to point B. Now the user moves the mouse away from the button as it is still animating – and you want to go back to point A. If you want to follow the rules of physical movement, you cannot immediately go back from your current color back to the light blue. Rather, you need to follow the current momentum towards the yellow color, decelerate and only then go back to light blue.
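One simple way to get this behavior is to drive the interpolation fraction (0 is light blue, 1 is yellow) with a damped spring instead of a timer. The sketch below is an assumption-laden illustration rather than a recommendation of one specific model: the critically damped spring, the stiffness value, the time step and the function names are all arbitrary choices.

```python
def spring_step(pos, vel, target, dt=0.01, stiffness=100.0):
    """Advance a critically damped spring by one time step (semi-implicit Euler)."""
    damping = 2.0 * stiffness ** 0.5          # critical damping: settles without oscillating
    vel += (stiffness * (target - pos) - damping * vel) * dt
    pos += vel * dt
    return pos, vel


def rollover_then_exit(exit_step=10, total_steps=300):
    """Head from A (0.0) towards B (1.0), then get sent back to A at `exit_step`."""
    pos, vel, trace = 0.0, 0.0, []
    for step in range(total_steps):
        target = 1.0 if step < exit_step else 0.0   # the mouse leaves the button
        pos, vel = spring_step(pos, vel, target)
        trace.append(pos)
    return trace


trace = rollover_then_exit()
at_exit = trace[9]        # fraction reached when the mouse left the button
peak = max(trace)         # the fraction keeps growing for a few more steps
```

After the mouse leaves, the fraction keeps moving towards yellow for a few more steps (`peak` is larger than `at_exit`), slows down, and only then heads back to light blue, where it eventually settles.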

Next, i’m going to talk about layout animations.

To be continued tomorrow.

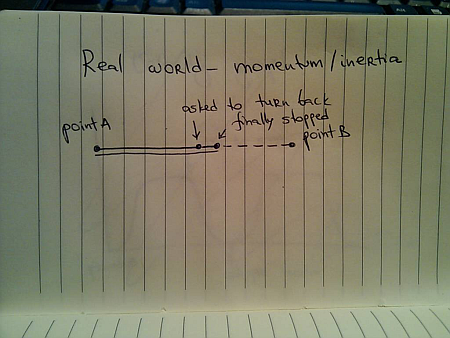

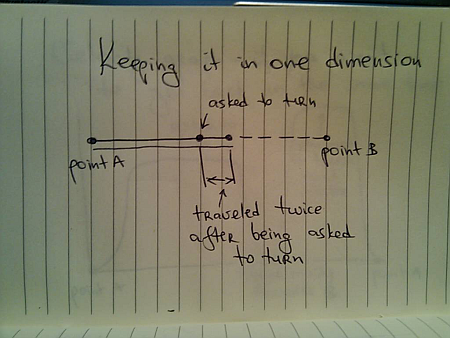

After looking at movement paths involving three points, let’s go back to the case of two points:

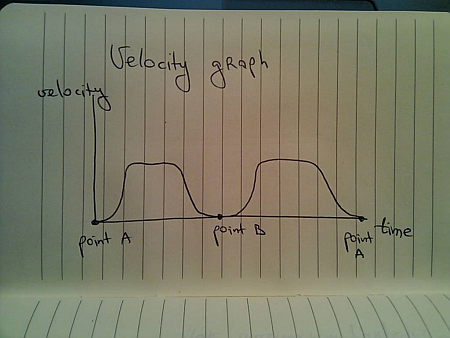

Now, once you arrive at B you are asked to go back to A. A straightforward solution would look like this:

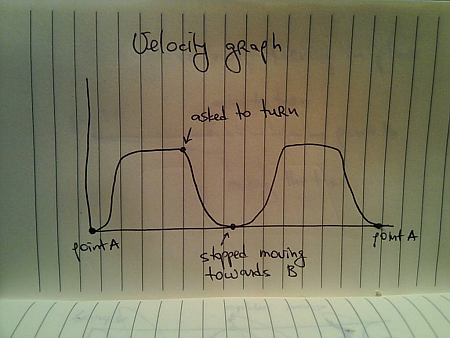

With the velocity graph for the overall travel:

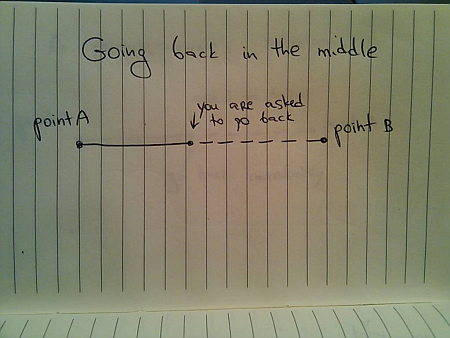

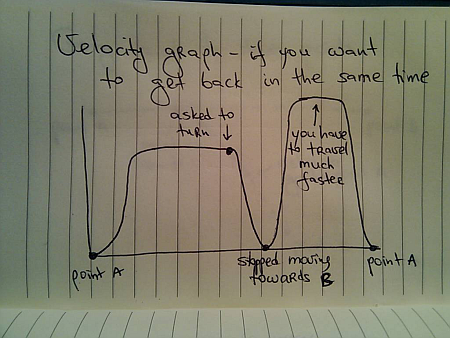

What about being asked to go back to A before you get to B?

Extending the previous solution, the first approach would be

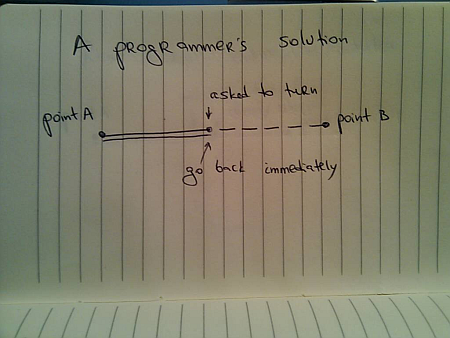

However, just as in the previous entry (with three points), when you are asked to change direction while you’re still moving, you need to account for your current momentum / inertia. In this case, it will take you some time to slow down – while still going towards B, and then you can start moving back to A:

And the corresponding velocity graph looks like this:

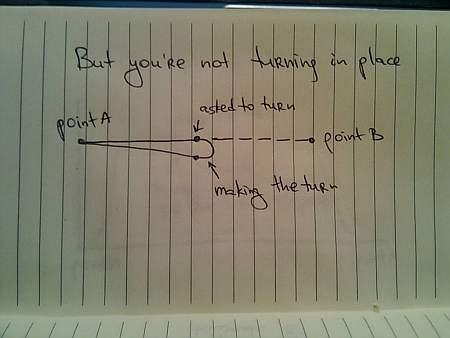

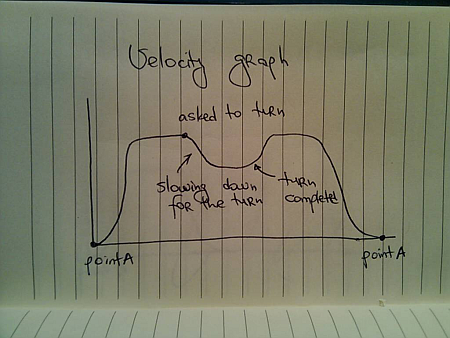

The movement paths in the previous entry remind us that the moving body is in most cases not symmetric around the Z axis, and so needs to take an actual turn – unless it’s a train bogie with driver cabs on both sides:

And the velocity graph with the turn does not have a zero-velocity point in the middle:

Going back to the case where you don’t need to take the turn (train bogie or other examples that will be discussed in the next installments), notice that you’re travelling more after being asked to turn back:

If X time units have passed since you left A and until you were asked to turn, and you are asked to get back to A in the same X time units, this means that you will need to travel faster:

with the actual speed depending on how long it takes you to decelerate once you’ve been asked to go back, and how much distance you have to cover before getting to A.
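A quick worked example, under simplifying assumptions that are mine rather than part of the model above: you were cruising at a constant speed when asked to turn, you brake at a constant rate, and the time you spend braking counts against the X time units you have for the way back. The function and parameter names are hypothetical.

```python
def required_return_speed(speed: float, elapsed: float, brake_time: float) -> float:
    """Average speed needed on the way back to A.

    speed      -- cruising speed on the way out (distance per time unit)
    elapsed    -- X, the time between leaving A and being asked to turn
    brake_time -- how long it takes to slow down to a full stop
    """
    if not 0.0 <= brake_time < elapsed:
        raise ValueError("braking must take less time than the return trip")
    way_out = speed * elapsed                 # distance from A when asked to turn
    overshoot = 0.5 * speed * brake_time      # extra distance covered while braking
    return (way_out + overshoot) / (elapsed - brake_time)


# 10 time units out at speed 1, and 2 time units needed to come to a stop.
print(required_return_speed(speed=1.0, elapsed=10.0, brake_time=2.0))
```

With these numbers you have 11 distance units to cover in the remaining 8 time units, so the return trip has to be noticeably faster than the way out, and the longer the braking takes, the worse it gets.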

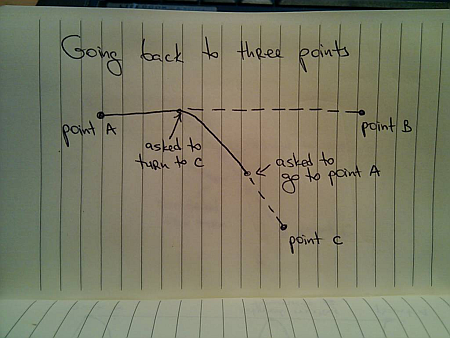

Now, let’s extend this example to three points:

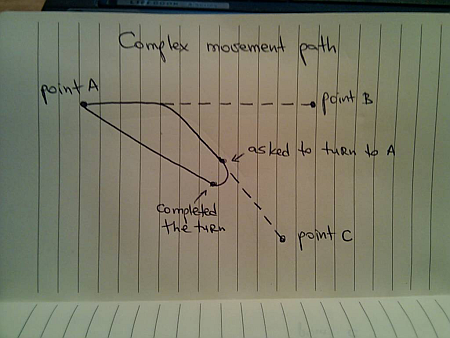

Here, you were asked to go to C while you were moving towards B, and are asked to change direction once again as you are moving towards C. The movement path will include you making a right turn towards A – creating a complex two-dimensional trajectory:

What if you’re asked to move to B instead?

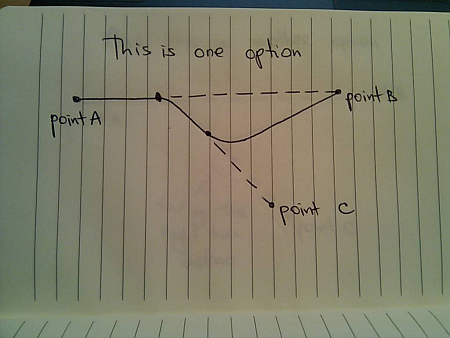

In this case you’re going to take a left turn towards B:

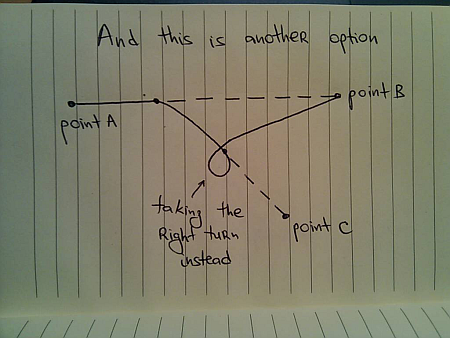

Or, if you’re a big fan of right turns (or have a problem with your steering wheel), you can take the right turn:

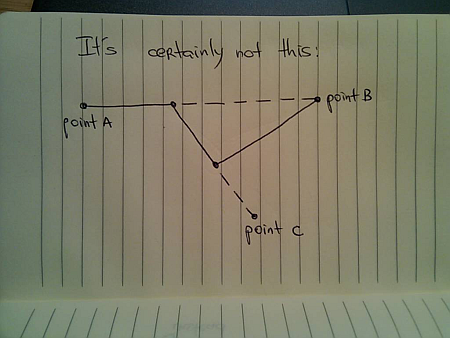

In any case, the real world does not have segmented paths.

All the examples in this series have talked about everyday movements of physical objects. Next, i’m going to talk about how the same principles apply to the objects and entities displayed on the screen.

To be continued tomorrow.
