It gives me great pleasure to announce the seventh major release of Radiance. Let’s get to what’s been fixed, and what’s been added. First, I’m going to use emojis to mark different parts of it like this:

💔 marks an incompatible API / binary change

🎁 marks new features

🔧 marks bug fixes and general improvements

Dependencies for core libraries

- Gradle from 7.1 to 7.2

- Kotlin from 1.5.10 to 1.5.31

- Kotlin coroutines from 1.5.0 to 1.5.2

Neon

Substance

Flamingo

- 🎁💔 Add reference to the ribbon as a parameter to all OnShowContextualMenuListener methods

- 🎁💔 Align icon theming across all Flamingo components

- 🔧 Fix layout of command buttons in TILE layout under RTL

- 🔧 Fix visuals of horizontal command button strips under RTL

- 🔧 Fix layout of anchored command buttons under RTL

- 🔧 Fix layout of command button popup content under RTL

- 🔧 Fix issues with updating ribbon gallery content

Photon

- 💔 Remove SvgBatikIcon and SvgBatikNeonIcon

- 💔 Move Photon to be under tools

General

As with the earlier 4.0.0 release, this release has mostly focused on stabilizing and improving the overall API surface of the various Radiance modules. As always, I’d love for you to take this Radiance release for a spin. Click here to get the instructions on how to add Radiance to your builds. And don’t forget that all of the modules require Java 9 to build and run.

And now for the next big thing or two.

I will take the next two weeks to fix any bugs or regressions that are reported against the 4.5.0 release. During the week of October 18th, all Radiance modules are going to undergo a major refactoring. While Radiance unified all of the Swing projects that I’ve been working on since around 2004, this unification was rather superficial. It made it easier to have inter-module dependencies. It made it easier to write documentation. It made it easier to schedule coordinated releases. But it didn’t make it easier to see what Radiance is.

In the last year or so I kept on asking myself the same questions over and over again.

If I started with these libraries today, would they still be using these disjointed codenames (Neon, Trident, Substance, Flamingo, not to mention Torch, Apollo, Zodiac, Meteor, Ember, Plasma, Spyglass, Beacon, etc.)? For somebody who wants to dive deep into the implementation details, are there places that are internally inconsistent? For an app developer who wants to get the most out of these libraries, does Radiance provide an externally approachable and consistent set of APIs?

The first step I’m taking to answer at least some of these questions is moving away from the codenames, and renaming everything based on the functional boundaries. And by everything I mean everything – modules, classes, methods, fields, variables, etc. It’s going to be a huge breaking change. But it’s something that I feel is way overdue for a project of this complexity. More specifically:

- org.pushingpixels.trident -> org.pushingpixels.radiance.animation
- org.pushingpixels.neon -> org.pushingpixels.radiance.common
- org.pushingpixels.substance -> org.pushingpixels.radiance.theming
- org.pushingpixels.flamingo -> org.pushingpixels.radiance.component
- org.pushingpixels.substance.extras -> org.pushingpixels.radiance.theming.extras
- org.pushingpixels.ember -> org.pushingpixels.radiance.theming.ktx
- org.pushingpixels.meteor -> org.pushingpixels.radiance.swing.ktx
- org.pushingpixels.plasma -> org.pushingpixels.radiance.component.ktx
- org.pushingpixels.torch -> org.pushingpixels.radiance.animation.ktx
- org.pushingpixels.tools.apollo -> org.pushingpixels.radiance.tools.schemeeditor
- org.pushingpixels.tools.beacon -> org.pushingpixels.radiance.tools.themingdebugger
- org.pushingpixels.tools.hyperion -> org.pushingpixels.radiance.tools.shapereditor
- org.pushingpixels.tools.ignite -> org.pushingpixels.radiance.tools.svgtranscoder.gradle
- org.pushingpixels.tools.lightbeam -> org.pushingpixels.radiance.tools.lafbenchmark
- org.pushingpixels.tools.photon -> org.pushingpixels.radiance.tools.svgtranscoder
- org.pushingpixels.tools.zodiac -> org.pushingpixels.radiance.tools.screenshot

Classes that used codenames, such as SubstanceLookAndFeel, TridentConfig, etc., will be renamed to follow the functionality of the matching API sub-surface. For example:

- SubstanceCortex -> RadianceLafCortex
- TridentCortex -> RadianceAnimationCortex
- SubstanceButtonUI -> RadianceButtonUI

This first round of refactoring will be the next Radiance release. It will not move classes between modules. It will not add or remove modules, classes or methods. Migrating from 4.5 to 5.0 will require a lot of import changes, and some amount of code refactoring wherever you call Radiance APIs. Once 5.0 is out, the next release will have follow-up refactorings to clean up places that have not aged well.
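Much of that import work is mechanical. As a minimal sketch (not a tool that ships with Radiance), here is how fully-qualified names could be rewritten with the package table above. The map and function names are hypothetical, and class renames such as SubstanceCortex -> RadianceLafCortex would need a separate table.

```kotlin
// Hypothetical migration helper: old codename packages -> planned Radiance 5.0 packages
val packageRenames: Map<String, String> = mapOf(
    "org.pushingpixels.trident" to "org.pushingpixels.radiance.animation",
    "org.pushingpixels.neon" to "org.pushingpixels.radiance.common",
    "org.pushingpixels.substance" to "org.pushingpixels.radiance.theming",
    "org.pushingpixels.flamingo" to "org.pushingpixels.radiance.component",
    "org.pushingpixels.substance.extras" to "org.pushingpixels.radiance.theming.extras",
    "org.pushingpixels.ember" to "org.pushingpixels.radiance.theming.ktx",
    "org.pushingpixels.meteor" to "org.pushingpixels.radiance.swing.ktx",
    "org.pushingpixels.plasma" to "org.pushingpixels.radiance.component.ktx",
    "org.pushingpixels.torch" to "org.pushingpixels.radiance.animation.ktx",
    "org.pushingpixels.tools.apollo" to "org.pushingpixels.radiance.tools.schemeeditor",
    "org.pushingpixels.tools.beacon" to "org.pushingpixels.radiance.tools.themingdebugger",
    "org.pushingpixels.tools.hyperion" to "org.pushingpixels.radiance.tools.shapereditor",
    "org.pushingpixels.tools.ignite" to "org.pushingpixels.radiance.tools.svgtranscoder.gradle",
    "org.pushingpixels.tools.lightbeam" to "org.pushingpixels.radiance.tools.lafbenchmark",
    "org.pushingpixels.tools.photon" to "org.pushingpixels.radiance.tools.svgtranscoder",
    "org.pushingpixels.tools.zodiac" to "org.pushingpixels.radiance.tools.screenshot",
)

fun migrateQualifiedName(name: String): String {
    // Try the longest old prefix first so that a more specific mapping
    // (for example substance.extras) wins over a shorter one (substance).
    for (oldPrefix in packageRenames.keys.sortedByDescending { it.length }) {
        // Match whole package segments only: the prefix must end the name or be followed by '.'
        if (name == oldPrefix || name.startsWith("$oldPrefix.")) {
            return packageRenames.getValue(oldPrefix) + name.substring(oldPrefix.length)
        }
    }
    // Not a codename package: leave it untouched
    return name
}
```

For a made-up class name, migrateQualifiedName("org.pushingpixels.substance.extras.Foo") returns "org.pushingpixels.radiance.theming.extras.Foo". Matching on whole segments keeps a package that merely shares a prefix, such as a hypothetical org.pushingpixels.neonx, unchanged.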

What’s the other big thing that I alluded to earlier? I want to provide support for consistent application of custom visuals across all supported Swing components. In Substance, this is done with painters. Due to the complex nature of some of these painters, Substance has been using cached offscreen bitmaps pretty much since the very beginning to maintain good performance. The very first time a component needs to be rendered in a certain visual state, Substance renders those visuals to an offscreen bitmap. The next time, if there is already a cached bitmap that matches the current state, Substance reuses it by drawing that bitmap to the screen.
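The caching model described above boils down to a map from visual state to a pre-rendered bitmap. The sketch below is a simplified illustration with plain AWT types; VisualStateKey and OffscreenPainterCache are hypothetical names, not Substance's actual classes, and a real key would carry far more state (colors, component state flags, and so on).

```kotlin
import java.awt.image.BufferedImage

// Hypothetical key: everything that affects how the painted visuals look
data class VisualStateKey(val width: Int, val height: Int, val state: String)

// Render each visual state once into an offscreen bitmap, then reuse it
class OffscreenPainterCache(private val renderVisuals: (BufferedImage, VisualStateKey) -> Unit) {
    private val cache = HashMap<VisualStateKey, BufferedImage>()

    // Counts how many bitmaps were actually rendered (cache misses)
    var renderCount = 0
        private set

    fun get(key: VisualStateKey): BufferedImage = cache.getOrPut(key) {
        // First request for this state: render the visuals into a new bitmap
        val image = BufferedImage(key.width, key.height, BufferedImage.TYPE_INT_ARGB)
        renderVisuals(image, key)
        renderCount++
        image
    }
}
```

A component's paint code would call cache.get(key) and draw the returned image with Graphics.drawImage. Note that this key only knows the logical size of the component, not the scale factor of the screen the bitmap ends up on.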

While this model has served Substance (and, by extension, the Flamingo components) rather well, it has started to show significant cracks over the last few years. You can see more information in this bug tracker on the underlying issues, but the gist of it is rather simple – screens with fractional DPI settings (125% or 150%, for example) do not play well with rendering offscreen bitmaps. The end result is that rendering a hairline (one-pixel wide) element can be fuzzy, distorted, or not there at all on the screen.
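A simplified geometric model shows why hairlines suffer at fractional scales. This sketch (hypothetical function name; it ignores how Java2D actually rasterizes and resamples images) computes how much of each device pixel is covered by a vertical line that is one logical pixel wide, once everything is scaled by the screen factor.

```kotlin
import kotlin.math.ceil
import kotlin.math.floor
import kotlin.math.max
import kotlin.math.min

// Coverage of each device pixel by a one-logical-pixel wide vertical line at logical column x
fun hairlineCoverage(logicalX: Int, scale: Double): Map<Int, Double> {
    val start = logicalX * scale
    val end = (logicalX + 1) * scale
    val coverage = LinkedHashMap<Int, Double>()
    for (devicePixel in floor(start).toInt() until ceil(end).toInt()) {
        // Overlap of [start, end) with the device pixel [devicePixel, devicePixel + 1)
        val overlap = min(end, devicePixel + 1.0) - max(start, devicePixel.toDouble())
        if (overlap > 0.0) coverage[devicePixel] = overlap
    }
    return coverage
}
```

At 100% and 200% the line lands on whole device pixels with full coverage. At 125%, logical column 1 spans device range 1.25 to 2.5 and covers device pixel 1 by 0.75 and device pixel 2 by 0.5; at 150% the split is 0.5 and 1.0. Partial coverage like this is what ends up looking fuzzy, uneven, or faint once a pre-rendered bitmap is scaled to the screen.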

It is going to be a long road, and it might take longer than usual to get the next Radiance release out the door. My current goal is that by the end of it, Radiance does not use any offscreen bitmaps for any of its rendering, and that everything is rendered directly onto the passed graphics object. Lightbeam will certainly come in handy throughout that process. Wait, excuse me, Lightbeam will be no more in a couple of weeks. It’s going to be Radiance LAF Benchmark instead.

In the past year or so I’ve been working on a new project. Aurora is a set of libraries for building Compose Desktop apps, taking most of the building blocks from Radiance. I don’t have a firm date yet for when the first release of Aurora will be available, but in the meanwhile I want to talk about something I’ve been playing with over the last few weeks.

Skia is a library that serves as the graphics engine for Chrome, Android, Flutter, Firefox and many other popular platforms. It has also been chosen by JetBrains as the graphics engine for Compose Desktop. One of the more interesting parts of Skia is SkSL, Skia’s shading language, which allows writing fast and powerful fragment shaders. While shaders are usually associated with rendering complex scenes in video games and CGI effects, in this post I’m going to show how I’m using Skia shaders to render textured backgrounds for desktop apps.

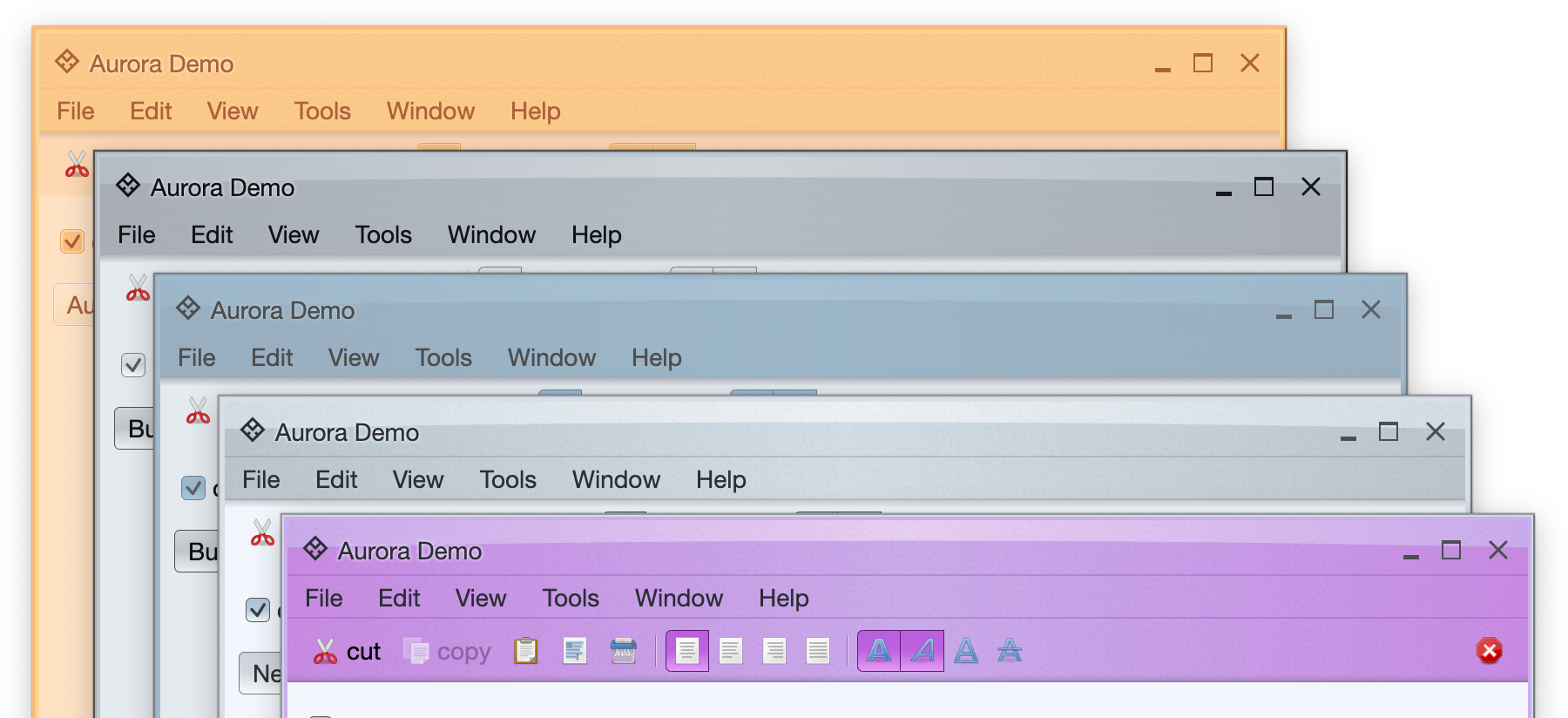

First, let’s start with a few screenshots:

Here we see the top part of a sample demo frame under five different Aurora skins (from top to bottom: Autumn, Business, Business Blue Steel, Nebula, Nebula Amethyst). Autumn features a flat color fill, while the other four have a horizontal gradient (darker at the edges, lighter in the middle) overlaid with a curved arc along the top edge. If you look closer, all five also feature something else: a muted texture that spans the whole colored area.

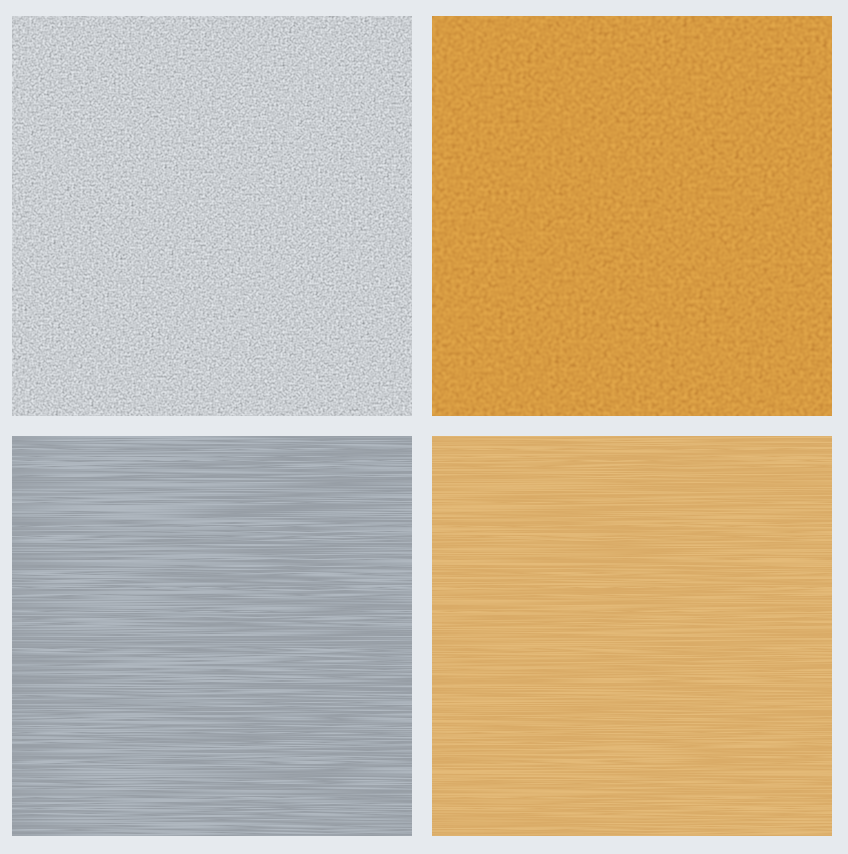

Let’s take a look at another screenshot:

Top row shows a Perlin noise texture, one in greyscale and one in orange. Bottom row shows a brushed metal texture, one in greyscale and one in orange.

Let’s take a look at how to create these textures with Skia shaders in Compose Desktop.

First, we start with Shader.makeFractalNoise that wraps SkPerlinNoiseShader::MakeFractalNoise:

```kotlin
import org.jetbrains.skia.Shader

// Fractal noise shader
val noiseShader = Shader.makeFractalNoise(
    baseFrequencyX = baseFrequency,
    baseFrequencyY = baseFrequency,
    numOctaves = 1,
    seed = 0.0f,
    tiles = emptyArray()
)
```

Next, we have a custom duotone SkSL shader that computes luma (brightness) of each pixel, and uses that luma to map the original color to a point between two given colors (light and dark):

```kotlin
// Duotone shader
val duotoneDesc = """
    uniform shader shaderInput;
    uniform vec4 colorLight;
    uniform vec4 colorDark;
    uniform float alpha;

    half4 main(vec2 fragcoord) {
        vec4 inputColor = shaderInput.eval(fragcoord);
        float luma = dot(inputColor.rgb, vec3(0.299, 0.587, 0.114));
        vec4 duotone = mix(colorLight, colorDark, luma);
        return vec4(duotone.r * alpha, duotone.g * alpha, duotone.b * alpha, alpha);
    }
"""
```

This shader gets four inputs. The first is another shader (which will be the fractal noise that we’ve created earlier). The next two are two colors, and the last one is alpha (for applying partial translucency).
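Since the duotone logic is plain arithmetic, it can be mirrored in Kotlin to sanity-check colors on the CPU. This is a hypothetical test helper, not part of Aurora; it follows the SkSL line by line, including the premultiplied output.

```kotlin
// RGBA color with components in the 0..1 range
data class Rgba(val r: Float, val g: Float, val b: Float, val a: Float)

// Same weights as the shader (Rec. 601 luma)
fun luma(c: Rgba): Float = 0.299f * c.r + 0.587f * c.g + 0.114f * c.b

// SkSL mix(x, y, t) = x * (1 - t) + y * t
fun mix(x: Float, y: Float, t: Float): Float = x * (1f - t) + y * t

fun duotone(input: Rgba, colorLight: Rgba, colorDark: Rgba, alpha: Float): Rgba {
    val t = luma(input)
    // Premultiplied output, as in the shader: RGB scaled by alpha, plus alpha itself
    return Rgba(
        mix(colorLight.r, colorDark.r, t) * alpha,
        mix(colorLight.g, colorDark.g, t) * alpha,
        mix(colorLight.b, colorDark.b, t) * alpha,
        alpha
    )
}
```

Note the direction of the mapping: dark input (luma 0) picks colorLight, and bright input (luma 1) picks colorDark.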

Now we create a byte buffer to pass our colors and alpha to this shader:

```kotlin
import java.nio.ByteBuffer
import java.nio.ByteOrder

// Two vec4 colors (2 x 16 bytes) plus one float (4 bytes) = 36 bytes,
// in the same order as the uniforms are declared in the shader
val duotoneDataBuffer = ByteBuffer.allocate(36).order(ByteOrder.LITTLE_ENDIAN)
// RGBA colorLight
duotoneDataBuffer.putFloat(0, colorLight.red)
duotoneDataBuffer.putFloat(4, colorLight.green)
duotoneDataBuffer.putFloat(8, colorLight.blue)
duotoneDataBuffer.putFloat(12, colorLight.alpha)
// RGBA colorDark
duotoneDataBuffer.putFloat(16, colorDark.red)
duotoneDataBuffer.putFloat(20, colorDark.green)
duotoneDataBuffer.putFloat(24, colorDark.blue)
duotoneDataBuffer.putFloat(28, colorDark.alpha)
// Alpha
duotoneDataBuffer.putFloat(32, alpha)
```
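Hard-coded offsets are easy to get wrong as the uniform list grows. A small helper (hypothetical, not an Aurora or Skia API) can write the floats sequentially in declaration order, producing the same tightly packed little-endian layout that the offsets above assume.

```kotlin
import java.nio.ByteBuffer
import java.nio.ByteOrder

// Packs float uniforms back to back: 4 bytes per float, so a vec4 takes 16 bytes
fun packFloatUniforms(vararg values: Float): ByteArray {
    val buffer = ByteBuffer.allocate(values.size * Float.SIZE_BYTES).order(ByteOrder.LITTLE_ENDIAN)
    for (value in values) buffer.putFloat(value)
    return buffer.array()
}
```

With it, the buffer above becomes packFloatUniforms(colorLight.red, colorLight.green, colorLight.blue, colorLight.alpha, colorDark.red, colorDark.green, colorDark.blue, colorDark.alpha, alpha), a 36-byte array ready for Data.makeFromBytes.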

And create our duotone shader with RuntimeEffect.makeForShader (a wrapper for SkRuntimeEffect::MakeForShader) and RuntimeEffect.makeShader (a wrapper for SkRuntimeEffect::makeShader):

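```kotlin
import org.jetbrains.skia.Data
import org.jetbrains.skia.RuntimeEffect

val duotoneEffect = RuntimeEffect.makeForShader(duotoneDesc)
val duotoneShader = duotoneEffect.makeShader(
    uniforms = Data.makeFromBytes(duotoneDataBuffer.array()),
    // The fractal noise shader becomes the shaderInput child in the SkSL
    children = arrayOf(noiseShader),
    localMatrix = null,
    isOpaque = false
)
```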

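With this shader, we have two options to fill the background of a Compose element. The first one is to wrap Skia’s shader in Compose’s ShaderBrush and use the drawBehind modifier:

```kotlin
import androidx.compose.foundation.layout.Box
import androidx.compose.foundation.layout.fillMaxSize
import androidx.compose.ui.Modifier
import androidx.compose.ui.draw.drawBehind
import androidx.compose.ui.geometry.Offset
import androidx.compose.ui.geometry.Size
import androidx.compose.ui.graphics.ShaderBrush

val brush = ShaderBrush(duotoneShader)
Box(modifier = Modifier.fillMaxSize().drawBehind {
    drawRect(
        brush = brush, topLeft = Offset(100f, 65f), size = Size(400f, 400f)
    )
})
```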

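The second option is to create a local Painter object, use a DrawScope.drawIntoCanvas block in the overridden DrawScope.onDraw, get the native canvas with Canvas.nativeCanvas, and call drawPaint on the native (Skia) canvas directly with the Skia shader we created:

```kotlin
import androidx.compose.foundation.layout.Box
import androidx.compose.foundation.layout.fillMaxSize
import androidx.compose.ui.Modifier
import androidx.compose.ui.draw.paint
import androidx.compose.ui.geometry.Size
import androidx.compose.ui.graphics.drawscope.DrawScope
import androidx.compose.ui.graphics.drawscope.drawIntoCanvas
import androidx.compose.ui.graphics.nativeCanvas
import androidx.compose.ui.graphics.painter.Painter
import org.jetbrains.skia.Paint
import org.jetbrains.skia.Rect

// Skia paint (not the Compose Paint) that carries the duotone shader
val shaderPaint = Paint()
shaderPaint.setShader(duotoneShader)

Box(modifier = Modifier.fillMaxSize().paint(painter = object : Painter() {
    override val intrinsicSize: Size
        get() = Size.Unspecified

    override fun DrawScope.onDraw() {
        this.drawIntoCanvas {
            val nativeCanvas = it.nativeCanvas
            // Save the canvas state so that the translation and clip do not leak into later drawing
            nativeCanvas.save()
            nativeCanvas.translate(100f, 65f)
            nativeCanvas.clipRect(Rect.makeWH(400f, 400f))
            nativeCanvas.drawPaint(shaderPaint)
            nativeCanvas.restore()
        }
    }
}))
```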

What about the brushed metal texture? In Aurora it is generated by applying modulated sine / cosine waves on top of the Perlin noise shader. The relevant snippet is:

```kotlin
// Brushed metal shader
val brushedMetalDesc = """
    uniform shader shaderInput;

    half4 main(vec2 fragcoord) {
        vec4 inputColor = shaderInput.eval(vec2(0, fragcoord.y));
        // Compute the luma at the first pixel in this row
        float luma = dot(inputColor.rgb, vec3(0.299, 0.587, 0.114));
        // Apply modulation to stretch and shift the texture for the brushed metal look
        float modulated = abs(cos((0.004 + 0.02 * luma) * (fragcoord.x + 200) + 0.26 * luma)
            * sin((0.06 - 0.25 * luma) * (fragcoord.x + 85) + 0.75 * luma));
        // Map 0.0-1.0 range to inverse 0.15-0.3
        float modulated2 = 0.3 - modulated / 6.5;
        half4 result = half4(modulated2, modulated2, modulated2, 1.0);
        return result;
    }
"""

val brushedMetalEffect = RuntimeEffect.makeForShader(brushedMetalDesc)
val brushedMetalShader = brushedMetalEffect.makeShader(
    uniforms = null,
    children = arrayOf(noiseShader),
    localMatrix = null,
    isOpaque = false
)
```
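The modulation is easier to reason about outside the shader. This CPU mirror (a hypothetical test helper, not Aurora code) takes the luma sampled at the start of a row plus the x coordinate, and returns the grey level the SkSL writes. Since every pixel in a row shares the same luma, each row becomes its own sine/cosine streak, which is what gives the horizontal brushed look.

```kotlin
import kotlin.math.abs
import kotlin.math.cos
import kotlin.math.sin

// Grey level produced by the brushed metal SkSL for one pixel
fun brushedMetalGrey(rowLuma: Float, x: Float): Float {
    val modulated = abs(
        cos((0.004f + 0.02f * rowLuma) * (x + 200f) + 0.26f * rowLuma) *
            sin((0.06f - 0.25f * rowLuma) * (x + 85f) + 0.75f * rowLuma)
    )
    // modulated is in 0..1, so the result stays within 0.3 - 1/6.5 (about 0.146) and 0.3
    return 0.3f - modulated / 6.5f
}
```

Because the product of a cosine and a sine never exceeds 1 in absolute value, the output is always within the narrow dark range mentioned in the shader comment.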

And then we pass the brushed metal shader as the input to the duotone shader:

```kotlin
val duotoneEffect = RuntimeEffect.makeForShader(duotoneDesc)
val duotoneShader = duotoneEffect.makeShader(
    uniforms = Data.makeFromBytes(duotoneDataBuffer.array()),
    children = arrayOf(brushedMetalShader),
    localMatrix = null,
    isOpaque = false
)
```

The full pipeline for generating these two Aurora textured shaders is here, and the rendering of textures is done here.

What if we want our shaders to be dynamic? First let’s see a couple of videos:

The full code for these two demos can be found here and here.

The core setup is the same: use RuntimeEffect.makeForShader to compile the SkSL shader snippet, pass parameters with RuntimeEffect.makeShader, and then use either ShaderBrush + drawBehind or Painter + DrawScope.drawIntoCanvas + Canvas.nativeCanvas + Canvas.drawPaint. The additional setup involves dynamically changing one or more shader attributes based on time (and maybe other parameters), and using Compose’s built-in reactive flow to update the pixels in real time.

First, we set up our variables:

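```kotlin
import androidx.compose.runtime.getValue
import androidx.compose.runtime.mutableStateOf
import androidx.compose.runtime.remember
import androidx.compose.runtime.setValue
import java.nio.ByteBuffer
import java.nio.ByteOrder
import org.jetbrains.skia.Paint
import org.jetbrains.skia.RuntimeEffect

// Compile the SkSL once and keep the compiled effect across recompositions
val runtimeEffect = remember(sksl) { RuntimeEffect.makeForShader(sksl) }
val shaderPaint = remember { Paint() }
// Room for a single float uniform (4 bytes)
val byteBuffer = remember { ByteBuffer.allocate(4).order(ByteOrder.LITTLE_ENDIAN) }
var timeUniform by remember { mutableStateOf(0.0f) }
var previousNanos by remember { mutableStateOf(0L) }
```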

Then we update our shader with the time-based parameter:

```kotlin
// Pack the current time into the uniform buffer and build a new shader from it
val timeBits = byteBuffer.clear().putFloat(timeUniform).array()
val shader = runtimeEffect.makeShader(
    uniforms = Data.makeFromBytes(timeBits),
    children = null,
    localMatrix = null,
    isOpaque = false
)
shaderPaint.setShader(shader)
```

Then we have our draw logic:

```kotlin
val brush = ShaderBrush(shader)
Box(modifier = Modifier.fillMaxSize().drawBehind {
    drawRect(
        brush = brush, topLeft = Offset(100f, 65f), size = Size(400f, 400f)
    )
})
```

And finally, a Compose effect that syncs our updates with the clock and updates the time-based parameter:

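```kotlin
import androidx.compose.runtime.LaunchedEffect
import androidx.compose.runtime.withFrameNanos

LaunchedEffect(Unit) {
    while (true) {
        withFrameNanos { frameTimeNanos ->
            val nanosPassed = frameTimeNanos - previousNanos
            val delta = nanosPassed / 100000000f
            // Skip the very first frame, when there is no previous timestamp yet
            if (previousNanos > 0L) {
                timeUniform -= delta
            }
            previousNanos = frameTimeNanos
        }
    }
}
```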

Now, on every clock frame we update the timeUniform variable, and then pass that newly updated value into the shader. Compose detects that a variable used in our top-level composable has changed, recomposes it and redraws the content – essentially asking our shader to redraw the relevant area based on the new value.
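The timing arithmetic can be pulled out of Compose and checked on its own. The class below is a hypothetical restatement of the withFrameNanos block, using plain fields instead of mutableStateOf, so on its own it does not trigger any recomposition.

```kotlin
// Same per-frame update as the LaunchedEffect block, without Compose state
class TimeUniformTicker {
    var timeUniform = 0.0f
        private set
    private var previousNanos = 0L

    fun onFrame(frameTimeNanos: Long) {
        val nanosPassed = frameTimeNanos - previousNanos
        // Dividing by 100,000,000 moves the uniform by 1.0 for every 100 ms
        val delta = nanosPassed / 100_000_000f
        // The first frame only records the timestamp
        if (previousNanos > 0L) {
            timeUniform -= delta
        }
        previousNanos = frameTimeNanos
    }
}
```

Feeding it frames 100 ms apart decrements timeUniform by 1.0 per frame, which is the value the shader then receives through the uniform buffer.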

Stay tuned for more news on Aurora as it is getting closer to its first official release!

Notes:

- Multiple texture reads are expensive, and you might want to force such paths to draw the texture to an SkSurface and read its pixels from an SkImage.

- If your shader does not need to create an exact, pixel-perfect replica of the target visuals, consider sacrificing some of the finer visual details for performance. For example, a large horizontal blur that reads 20 pixels on each “side” as part of the convolution (41 reads for every pixel) can be replaced by double or triple invocation of a smaller convolution matrix, or downscaling the original image, applying a smaller blur and upscaling the result.

- Performance is important as your shader (or shader chain) runs on every pixel. It can be a high-resolution display (lots of pixels to process), a low-end GPU, a CPU-bound pipeline (no GPU), or any combination thereof.
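To put rough numbers on the downscaling idea, the sketch below counts texture reads for a horizontal blur over a 1920x1080 area. It is a back-of-the-envelope model with hypothetical function names: it counts only the blur's own reads, not the cost of the downscale and upscale passes or the loss in quality.

```kotlin
// Reads for a horizontal blur of the given radius at full resolution
fun fullResolutionReads(width: Long, height: Long, radius: Long): Long =
    width * height * (2 * radius + 1)

// Reads for the same blur after downscaling both dimensions (and the radius) by factor
fun downscaledReads(width: Long, height: Long, radius: Long, factor: Long): Long =
    (width / factor) * (height / factor) * (2 * (radius / factor) + 1)
```

With a radius of 20 (41 reads per pixel, as in the note above), full resolution needs 85,017,600 reads. Downscaling by 4 leaves 480x270 pixels and a radius of 5 (11 reads per pixel), for 1,425,600 reads, roughly 60 times fewer before the scaling passes are added back in.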

Continuing the ongoing series of interviews on fantasy user interfaces, it’s my pleasure to welcome Rhys Yorke. In this interview he talks about concept design, keeping up with advances in consumer technology and viewers’ expectations, breaking away from the traditional rectangles of pixels, the state of design software tools at his disposal, and his take on the role of technology in our daily lives. In between these and more, Rhys talks about his work on screen graphics for “The Expanse”, its warring factions and the opportunities he had to work on a variety of interfaces for different ships.

Kirill: Please tell us about yourself and the path that took you to where you are today.

Rhys: My background is pretty varied. I was in the military, I’ve worked as a computer technician, I’ve worked as a programmer, I’ve worked as a comic book artist, I’ve worked in video games, I’ve done front end web development, I’ve done design for web and mobile, I’ve worked in animation, and now film and TV.

It’s been a long, winding road, and I find it interesting. I continue to draw on a lot of those varied experiences in video games and comic books, but also from the military, as I’ve worked on “G.I. Joe” and now “The Expanse” when we’re doing large ship battles. It’s interesting, and it’s a bit weird to think of how I got where I am now. It wasn’t necessarily planned, but I just adapted to the times.

Kirill: Do you think that our generation was lucky enough to have this opportunity to experience the beginning of the digital age, to have access to these digital tools that were not available before? I don’t even know what I’d be doing if I was born 300 years ago.

Rhys: My first computer was a Commodore 64. My dad brought it home when I was eight years old. He handed me the manual and walked away, and I hooked it up and started typing in programs in BASIC. Certainly it’s not like today, when my son started using an iPhone when he was two and could figure it out, but at the same time it’s not something that we shied away from.

I feel like we probably are unique in that we’ve had the opportunity to see what interfacing with machines and devices was like prior to the digital age, as well as deep into the digital age where we currently are. You look at rotary phones and even television sets, and at how vastly different the way we interact with them is. We’ve been fortunate enough to see how that’s evolved. It does put us in a unique situation.

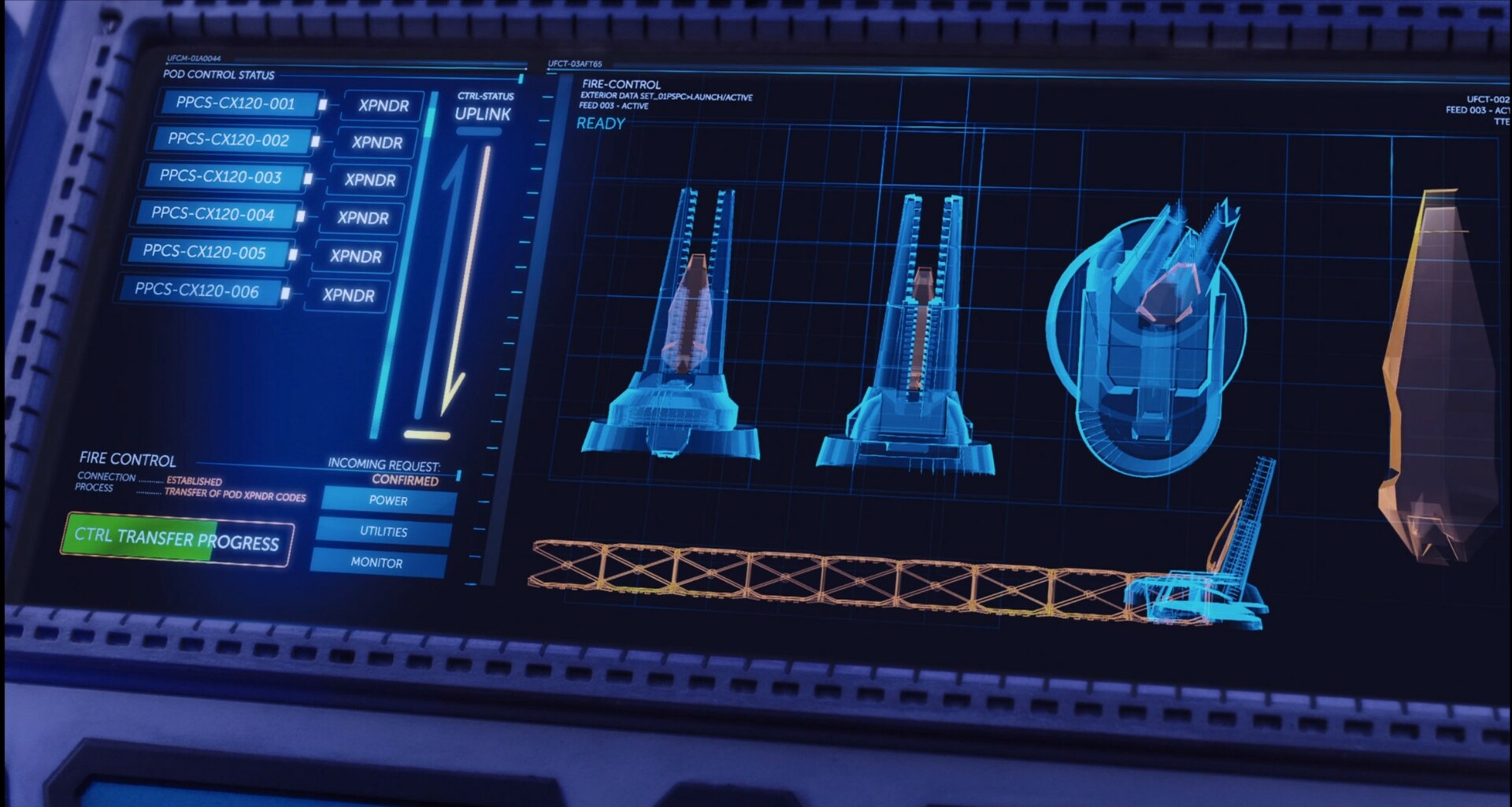

Screen graphics of the Agatha King, “The Expanse”, by Rhys Yorke

Kirill: If I look at your web presence on your website and Instagram, you say you are a concept artist. When you meet somebody at a party, how difficult or easy is it to explain what it is?

Rhys: I even have trouble explaining what a concept artist does to my family. I sometimes refer to it as concept design and boil it down to “I design things”. I’ll say that, for instance, on “The Expanse” I design the interfaces that the actors use on the ships, or that I design environments or props that appear in a show. People seem to understand more when you talk about what a designer does, as opposed to the title of a concept artist.

Unless somebody is into video games or specifically into the arts field, concept artist is still a title that is a bit of a mystery for most people.

Kirill: It also looks like you do not limit yourself, if you will, to one specific area. Is that something that keeps you from getting too bored with one area of digital design?

Rhys: I find it’s a challenge and a benefit as well when people look at my portfolio. I have things ranging from cartoony to highly realistic, rendered environments. I do enjoy a balance of that.

I enjoy the freedom of what the stylized art allows. Sometimes I feel that you have the opportunity to be a little bit more creative and to take a few more risks. And on the other hand, the realistic stuff poses a large technical hurdle to overcome. From the technical standpoint, it’s challenging to create things that look like they should exist in the real world. And it’s a different challenge than it would be to create something that’s completely stylized, something that requires the viewer to suspend their disbelief.

These experiences feed into each other. I’m working in animation, and then I’ll take that experience and apply it to the stuff on “The Expanse”. I do enjoy not being stuck into a particular area, so I try to adapt. If someone will ask “What’s your own style?” I’ll say that it’s a bit fluid.



Continuing the ongoing series of interviews with creative artists working on various aspects of movie and TV productions, it is my pleasure to welcome Jeriana San Juan. In this interview she talks about working at the intersection of Hollywood and fashion, differences and similarities between fashion design and costume design, doing research in the digital world, and keeping up with the ever-increasing demands of productions and viewers’ expectations. Around these topics and more, Jeriana dives into her work on the recently released “Halston”.

Jeriana San Juan

Kirill: Please tell us about yourself and the path that took you to where you are today.

Jeriana: My name is Jeriana San Juan, and my entry into this business began when I was very young. I was dazzled by movies that I would watch as a child. I watched a lot of older movies and Hollywood classics. It was the likes of “An American in Paris” and “The Red Shoes”, and other musicals from the 1940s and 50s. That was my first experience of feeling immersed and absorbed in fantasy, and I wanted to be a part of that.

I loved in particular the costumes, and how they helped tell the story, or how they helped the women look more glamorous, or created a whole story within the story. Those were the things I was attracted to.

From a young age I was raised by my grandmother, and she was a seamstress and a dressmaker. She saw that I loved the magic of what I was watching on screen, and also that I loved clothes myself. I loved fashion, magazines and stores, and she helped initiate that education for me. She would show me how to create clothes from fabric and how to start manifesting things that were in my imagination. So I credit her with that.

But I didn’t know that costume design specifically could be a career [laughs]. I thought I wanted to be a fashion designer, because I knew that could be a real job that I could have when I grew up. And as I grew up, I learned that my impulses were more those of a costume designer than a fashion designer, and so I moved into that arena later on in my career.

Kirill: Where do you draw the line between the two? Is there such a line between being a costume designer and a fashion designer?

Jeriana: There are two different motivations with costume design and fashion design. Fashion design, to me, can be complete storytelling, but you make up the story as you design it. Costume design is storytelling with the motivation of a specific character, a specific point of view, and a specific story to tell.

My impulses in clothing are through more character-driven costume design and story-driven costume design. That’s my inclination. I feel that there are some bones in me that very much still are the bones of a fashion designer, and to me it’s not completely mutually exclusive. Those two mindsets can exist in one person.

I look back at those old 1950s movies that were designed by William Travilla and Edith Head and so many other great names, and those Hollywood costume designers also had fashion lines. Adrian had a boutique in Los Angeles and people would go there to look like movie stars, and he also designed movies. So in my brain, I’ve always felt like there’s a duality in my creativity that can lend itself to both angles.

Kirill: You are at an intersection of two rather glamorous fields, Hollywood and fashion, and yet there are probably a lot of “unglamorous” parts of your work day. Was it any surprise to you when you started working in the field and saw how much sweat and tears go into it?

Jeriana: Never. I come from a family of immigrants, and I’ve seen every person in my family work very hard. I never assumed anything in this life would be given to me free of charge. That’s the work ethic that I was raised with, so to me the hard work was never an issue because I’m always prepared to work hard.

Yes, it’s a very unglamorous life and career. Day to day is not glamorous at all. It’s running around, it’s 24/7 emails and phone calls, it’s rolling your sleeves up and figuring out the underside of a dress. It’s very tactile. I don’t sit in a chair and point to people what to do. It’s very much hands-on, and that never scared me at all. It excites me.

Costume design of “Halston” by Jeriana San Juan. Courtesy Netflix.
