Josh Lehman on the value of UX design:

A weak user experience was forgivable during a time when software was more expensive (sunk-cost fallacy in action), the competitive playing field was much more “sparse” and users unwrapped a nice shiny manual which accompanied their software acquisition. Users in this bygone era understood that they would likely need to alter themselves to meet the demands of the software. When the interface didn’t make sense the user would blame themselves and cite the fact that they “hadn’t yet learned it”.

Things are very different today. Customers now expect the softwares (apps) to meet their needs and match their usability expectations right out of the proverbial box. If you’re building a new consumer-focused app, great design and solid usability are no longer just positive differentiators or features on a checklist. A solid, usable design is part of your entry fee into the software market, giving you a chance at success. Without a solid design your app can too easily become a casualty of the new “discardable apps” phenomenon.

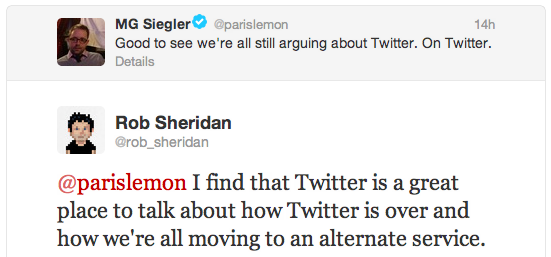

Ben Brooks on his decision to leave Twitter:

I’ve stopped posting new updates. I’m only checking it a couple times a day. And if Twitter doesn’t do an about face I’ll be done with it very quickly. I’m giving them one last chance, but also slowing my usage to a crawl — imagine the power of the entire nerd community doing this. The easiest way to making Twitter take notice, is to remove your eyeballs from their advertising, and devalue the network by reducing the size of it.

First, nerds are running third-party clients that do not show promoted tweets or ads. Second, the number of people retweeting every single Justin Bieber tweet vastly exceeds the number of people discussing the latest API restrictions. Third, removing these eyeballs actually improves Twitter’s bottom line, as it reduces the strain on the backends and does not hurt the current business model one bit.

The flexibility of a human mind continues to amaze me. Somehow my kids got hooked on “Bubble Guppies”, and my four-year-old son identifies himself with the teacher, Mr. Grouper. And yet he only smiled when I told him that I had grouper fingers for lunch on Monday. For him it’s just another external signal to observe, absorb and reconcile, even as the brightest of humankind struggle in their feeble attempts to put intelligence in artificial intelligence.

A work of fiction may poke fun at a grown man displaced centuries ahead of his time and his failure to adjust to the technological and societal norms, but in reality a human mind will adapt. A human body can only withstand a minuscule variation in the characteristics of the physical environment. The ability of a human mind to adapt, on the other hand, is on a completely different scale. Placed in a new environment with a new set of constraints, the human mind will skip the why am I here, and instead will probe the boundaries and say I’m here, how do I adapt and what can I do?

File systems are a given in any modern computing environment. From magnetic tapes to optical disks, from audio cassettes to flash drives, from punch cards to cloud storage, it’s hard to imagine doing anything useful without being able to partition the data into individual chunks, without being able to store, retrieve and modify them at will. Any non-trivial collection of chunks needs to be organized, in the physical world as well as in the digital one. In one form or another, folders are physical entities that create separation and grouping in the physical world. It wouldn’t take a large leap of faith to translate this concept into the digital world. A world which is not bound by the same limitations. A world in which you can put as many files as you need in a single folder. A world in which nested folders are just a natural continuation of existing ways to partition and group the data. A world in which you can create a symbolic link to a file or a folder and reference the same data from multiple places, as if you had identical copies of it. A world that continues expanding its boundaries and frees the human mind from the constraints of the physical world.
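The symbolic link idea is easy to see in a few lines. What follows is only a minimal sketch, assuming a POSIX-like system where creating symlinks needs no special privileges; the file names `notes.txt` and `shortcut.txt` and the helper `demo_symlink` are made up for illustration.

```python
import os
import tempfile
from typing import Tuple


def demo_symlink() -> Tuple[str, bool]:
    """Write through the original path, then read through the link."""
    with tempfile.TemporaryDirectory() as root:
        original = os.path.join(root, "notes.txt")    # hypothetical file name
        link = os.path.join(root, "shortcut.txt")     # hypothetical link name

        with open(original, "w") as f:
            f.write("first draft")

        # The link is a second name for the same data, not a copy of it.
        os.symlink(original, link)

        # Change the data through the original path only.
        with open(original, "w") as f:
            f.write("second draft")

        # Reading through the link sees the updated content.
        with open(link) as f:
            via_link = f.read()

        same_target = os.path.realpath(link) == os.path.realpath(original)
        return via_link, same_target
```

The link behaves as if it were an identical copy, yet there is only one set of bytes on disk, which is exactly what a physical binder cannot do.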

Which is why I only partially agree with Oliver Reichenstein’s take in his “Mountain Lion’s New File System”:

The folder system paradigm is a geeky concept. Geeks built it because geeks need it. Geeks organize files all day long. Geeks don’t know and don’t really care how much their systems suck for other people. Geeks do not realize that for most people organizing documents within an operating system next to System files and applications feels like a complicated and maybe even dangerous business.

The concept itself was certainly not invented by geeks. The need to manage large volumes of heterogeneous data did not arise with the advent of computers. The pace of creating data in the pre-computer world was certainly limited by the means of production and dissemination, but digital folder systems are not much different from their physical counterparts. Extending that physical notion into the digital world, however, had interesting consequences.

I’m here, how do I adapt and what can I do? Adapting is easy. If I know how to group related documents into one binder on my desk, and how to put labels on different binders, and how to stack binders on different shelves, and how to label different shelves, I’m all set to start organizing the matching files and folders on my computer. The evolution of file management software has provided an ever-expanding array of tools to copy, move, rename, split, collate and combine files across different folders. The file as the basic unit of storing information has defined the way we’ve interacted with computers over the last fifty years.

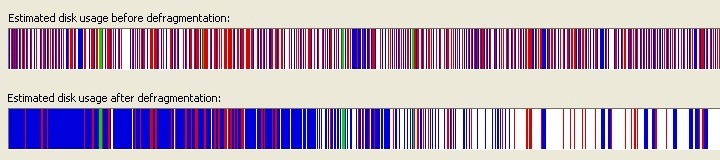

I vividly remember running a variety of disk defragmentation tools in the late 1990s. They were a necessity. Hard drives were reasonably large for documents, but on average they were not large enough to accommodate the increasing appetite for installing games and storing video files. The physical aspects of seeking sectors on rotating magnetic disks propagated all the way up to the runtime performance of the entire system, and required running defragmentation tools on a regular basis. You wanted the files to be clustered as tightly as possible to reduce the maximum seek time, and you never wanted a file to be scattered across non-contiguous disk sectors.

There was a visceral, almost palpable pleasure in seeing the lines and squares (depending on the interface) change colors and places. You could almost feel your files snap together, you could almost feel that big video file become one contiguous chunk, you could almost feel your computer run faster.

This is why Vista’s defragmenter UI was such a disappointment. There is almost nothing to see, almost nothing to feel. There are passes, there is progress, but little in the way of exposing the work that is being done, the chunks of data that are being moved, torn apart and sewn back together. It’s almost as if the files are not there at all.

And what if there are no files? What would happen if that is your new boundary? What if instead of asking why, you say how do I adapt and what can I do?

What if there was no file explorer on your desktop machine, much like there is no file explorer preinstalled on your phone or tablet? What if you severed the mental connection between the visual representation of the data on your screen and the way it is encoded, transmitted and stored on a physical medium? Would you care if the pictures you took on your last trip to Europe were stored as one gigantic blob somewhere on your disk or in the cloud, if you were still able to view, edit and share them individually as you do today? Would you care if the document you are working on is saved as a single file, or as a binary blob in some relational database sharded and replicated across multiple cloud servers, merely represented as a single unit when you open it? And what is a single local file if not a binary blob delineated by the file system’s bookkeeping, and defined by its encoding?

How would you write an application using a system API that has no references to file objects, that has no specific definition of how and where each individual chunk of data is written? How would you design a system API that does not have such references? Would that make the life of system developers harder? Would that make the life of application developers harder? And, more importantly, would that make the life of users easier? As long as all the sides accept the new paradigm, that is.
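One way to make these questions concrete is to imagine what the application-facing side of such an API might look like. The sketch below is purely hypothetical: `ItemStore`, `Item`, `save`, `load` and `find` are names invented here, not any real platform’s API, and the in-memory dictionary merely stands in for whatever the system would actually do with the bytes.

```python
import uuid
from dataclasses import dataclass
from typing import Dict, FrozenSet, Iterable, List


@dataclass(frozen=True)
class Item:
    """A unit of user data: no path, no folder, no file name."""
    item_id: str
    kind: str              # e.g. "photo" or "document"
    tags: FrozenSet[str]
    payload: bytes


class ItemStore:
    """Hypothetical storage API that never exposes where the data lives."""

    def __init__(self) -> None:
        # Stand-in for the real backing store (local blob, database, cloud).
        self._items: Dict[str, Item] = {}

    def save(self, kind: str, payload: bytes, tags: Iterable[str] = ()) -> str:
        """Store the payload and return an opaque identifier."""
        item_id = uuid.uuid4().hex
        self._items[item_id] = Item(item_id, kind, frozenset(tags), payload)
        return item_id

    def load(self, item_id: str) -> bytes:
        """Return the payload for an identifier obtained from save()."""
        return self._items[item_id].payload

    def find(self, kind: str, tag: str) -> List[str]:
        """Look items up by what they are, not by where they are."""
        return [
            item.item_id
            for item in self._items.values()
            if item.kind == kind and tag in item.tags
        ]
```

An application built on something like this would ask for “photos tagged europe-trip” instead of opening a folder, and whether each photo is a separate file or a slice of one large blob would be invisible to it.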

Indie devs keep on bitching about how they’ve helped bring Twitter to the masses and invent some of the features later adopted for official use. Stop bitching. It was a two-way street. You amassed reputation, carved out a name for yourself, collected tens of thousands of followers on Twitter, your blogs and your podcasts. Some clients were so popular that offers were made and acquisition deals were signed.

It was a community for a few, and then the VC money came in, and the investors want to see a whole different kind of profit. Is it a different community than it was a few years ago? Of course it is. You grew in your popularity and influence, and Twitter did the same. It’s no more yours today than it was yours back then.

Seth Godin talks about tribes. There are two types of tribes that are relevant here. For each such indie dev, there’s his consumer tribe that is interested in following his stream. And then there is also the creator tribe encompassing like-minded devs, sparking dialogs and conversations with threads that involve some people from their consumer tribes as well. In a sense, there is also a more amorphous meta-consumer tribe associated with that creator tribe, where people interested in following one creator are more likely to follow similarly oriented creators.

The creator tribe that I follow on Twitter is all ready to move to app.net, and yet there are only two people seriously talking about leaving Twitter. Because that creator tribe is ready to move, but not ready to lose its meta-consumer tribe. And so it stays, and keeps on bitching about staying.